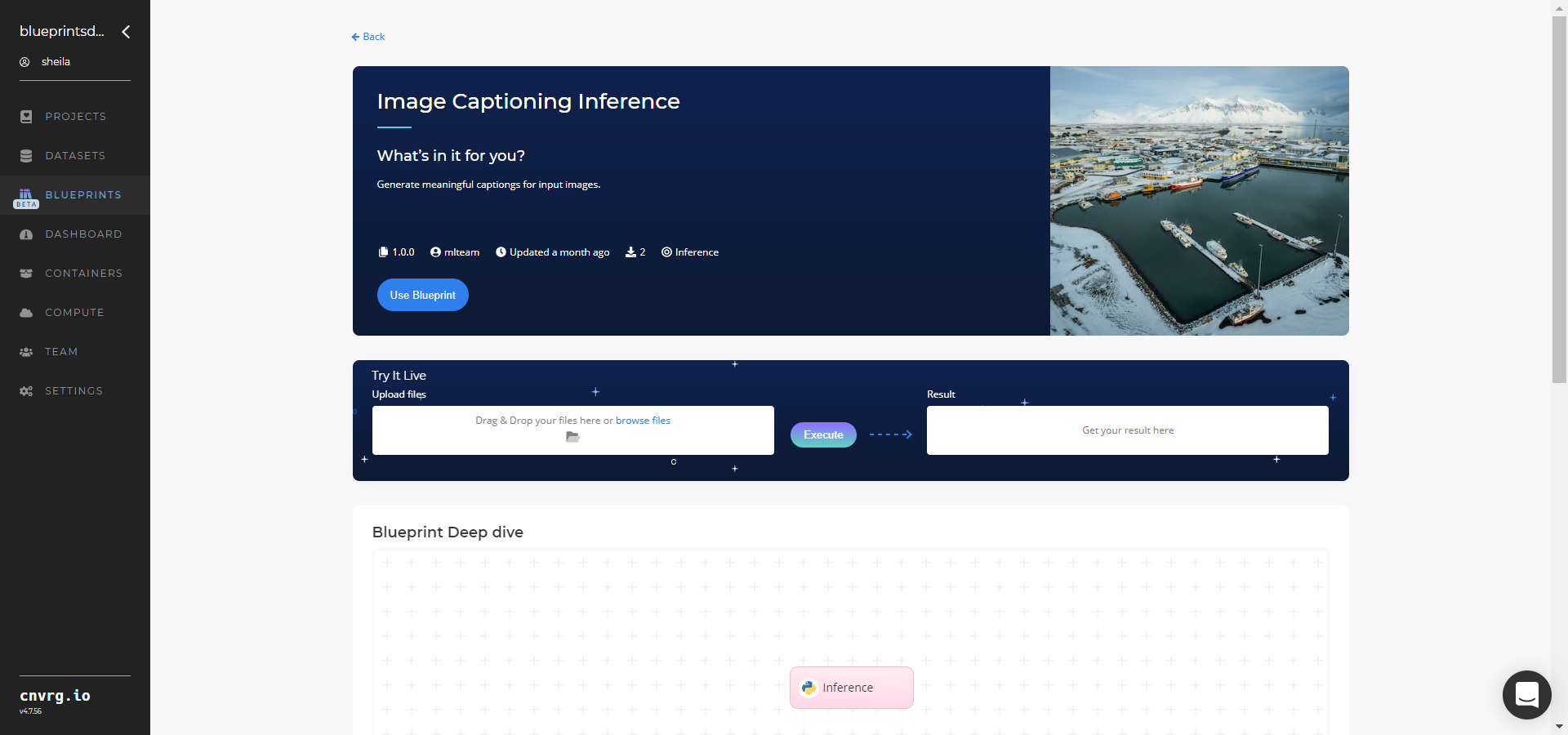

# Image Captioning AI Blueprint (deprecated as of 11/2024)

# Inference

Image captioning uses neural networks to generate textual descriptions of images. It combines computer vision, which interprets the image content, with natural language processing (NLP), which expresses that content as text.

# Purpose

Use this inference blueprint to immediately generate descriptive captions for images. It deploys the pretrained caption-generator model as a ready-to-use API endpoint that can be quickly integrated with your data and application.

This blueprint deploys a pretrained OFA image captioning model that returns a caption for each image provided. You can submit a single image or multiple images, and the JSON response returns the captions in the same order as the input images.
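Because captions come back in input order, a client can match each caption to its image purely by position. The following is a minimal sketch using only the Python standard library. The request layout it builds (a JSON object with a hypothetical `input_params` key holding a list of base64-encoded images) is an assumption for illustration, not the endpoint's documented schema; copy the real schema from your endpoint's integration snippet.

```python
import base64
import json
from pathlib import Path


def encode_image(path: Path) -> str:
    """Return the raw bytes of an image file as a base64 ASCII string."""
    return base64.b64encode(path.read_bytes()).decode("ascii")


def build_payload(paths: list[Path]) -> str:
    """Serialize one or more images into a single JSON request body.

    ASSUMPTION: the "input_params" key and the list-of-base64-strings
    layout are hypothetical; use the schema from your endpoint's snippet.
    """
    return json.dumps({"input_params": [encode_image(p) for p in paths]})


def pair_captions(paths: list[Path], captions: list[str]) -> list[tuple[str, str]]:
    """Match captions to images by position.

    Relies on the blueprint returning captions in the same order as the
    images were submitted, so the i-th caption belongs to the i-th image.
    """
    if len(paths) != len(captions):
        raise ValueError(f"expected {len(paths)} captions, got {len(captions)}")
    return [(p.name, c) for p, c in zip(paths, captions)]
```

For example, `pair_captions([Path("cat.jpg"), Path("dog.jpg")], ["a cat on a sofa", "a dog in a park"])` returns `[("cat.jpg", "a cat on a sofa"), ("dog.jpg", "a dog in a park")]`. Returning a list of pairs rather than a dictionary keeps duplicate file names from different folders from overwriting each other.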

# Instructions

NOTE

The minimum recommended resources to run this blueprint are 3.5 CPUs and 14.5 GB of RAM, or an AWS xlarge memory on-demand instance.

NOTE

This blueprint’s performance can benefit from using a GPU as its compute.

Complete the following steps to deploy this caption-generator endpoint:

- Click the Use Blueprint button.

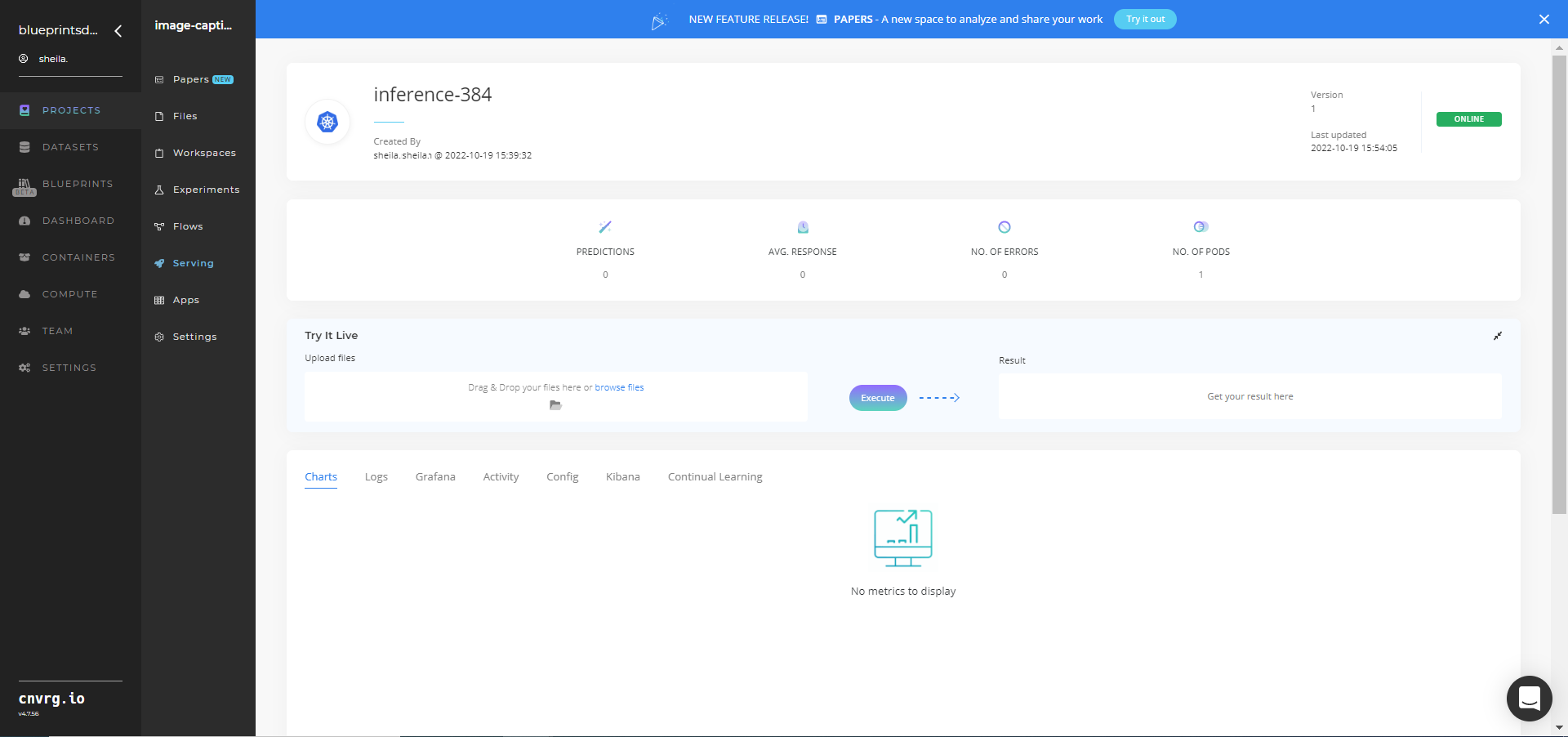

- In the dialog, select the relevant compute to deploy the API endpoint and click the Start button.

- The cnvrg software redirects you to your endpoint. Complete one or both of the following options:

- Use the Try it Live section with any image to check your model.

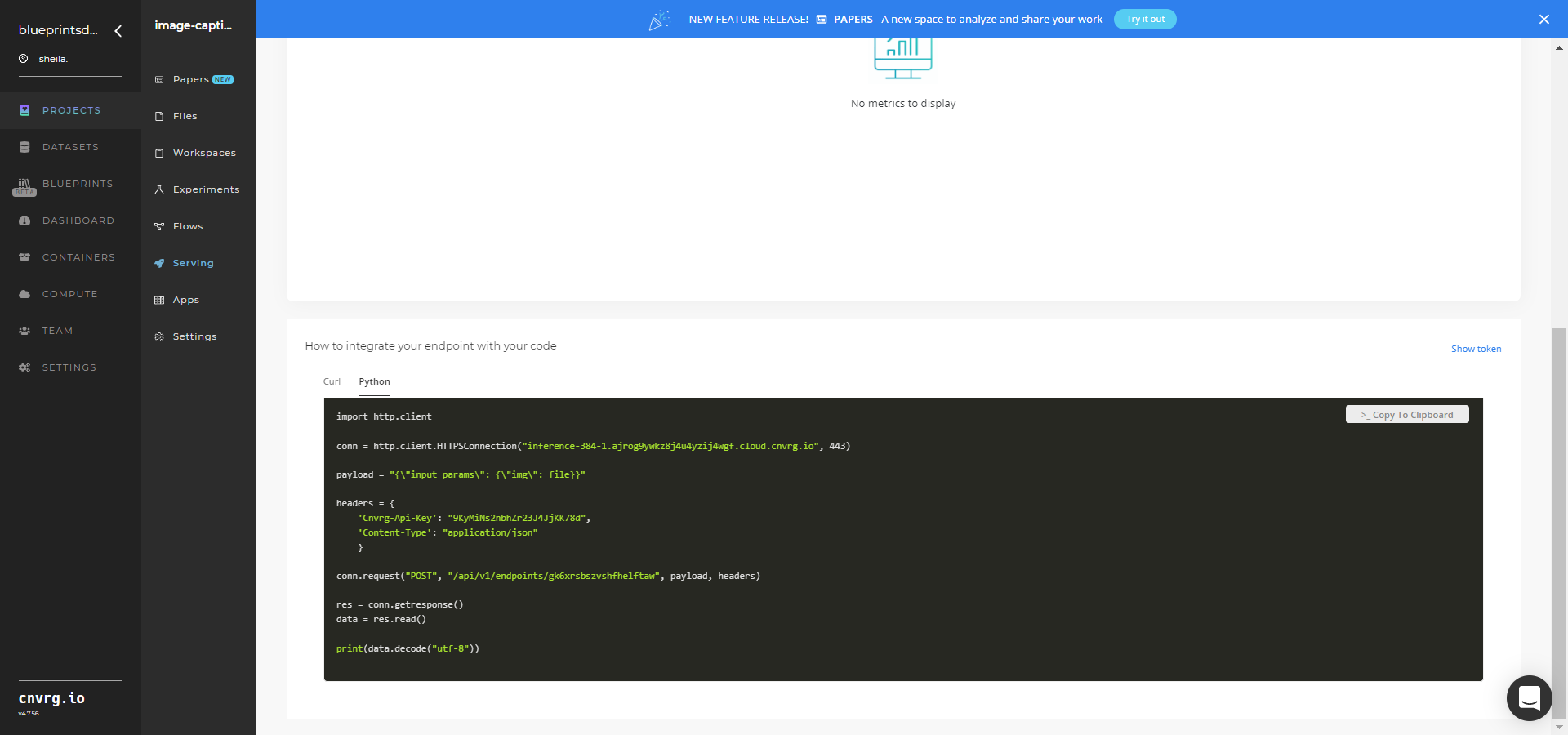

- Use the bottom integration panel to integrate your API with your code by copying in the code snippet.

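The snippet copied from the integration panel is the authoritative way to call the endpoint. As a rough, standard-library-only sketch of what such a call can look like in Python, the code below prepares and sends a JSON POST request. The `Cnvrg-Api-Key` header name and the idea of passing the key in a header are assumptions for illustration; the URL, key, and request body are placeholders you must take from your own endpoint's snippet.

```python
import json
import urllib.request


def make_request(url: str, api_key: str, body: str) -> urllib.request.Request:
    """Prepare a POST request that carries a JSON body.

    ASSUMPTION: the "Cnvrg-Api-Key" header name is hypothetical here;
    confirm the header and URL against the copied integration snippet.
    """
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json", "Cnvrg-Api-Key": api_key},
        method="POST",
    )


def send_request(req: urllib.request.Request, timeout: float = 60.0) -> object:
    """Send the request and return the decoded JSON response.

    The exact shape of the response (for example, where the captions
    list sits) is not specified here, so it is returned as parsed JSON.
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a real endpoint URL and key, `send_request(make_request(url, key, body))` returns the parsed JSON response; check its structure against what the Try it Live section shows before extracting the captions.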

An API endpoint that generates textual captions for images has now been deployed. For information on this blueprint's software version and release details, click here.

# Related Blueprints

Refer to the following blueprints related to this inference blueprint:

- Image Captioning Training

- Scene Classification Train

- Scene Classification Inference

- Scene Classification Batch