# Object Detection AI Blueprint - Deprecated as of 11/2024

# Batch-Predict

Object detection refers to detecting instances of semantic objects of a certain class in images and videos. The output of the object-detector model describes each object's class and coordinates, which are used to draw a box around the detected object and label it according to its class.

# Purpose

Use this batch blueprint to run a pretrained, tailored model in batch mode on your custom data to detect objects in images and videos stored in a directory. To run this blueprint, provide the S3 Connector path to a directory containing the videos and images.

Click here to view the names of the default object classes supported for detection. To detect custom objects, you must provide the path to a weights file: run this blueprint's counterpart training blueprint, and then upload the trained model weights to the S3 Connector.

This blueprint supports the following video and image formats:

| Videos | Images |
|---|---|
| mov | bmp |
| avi | jpg |
| mp4 | jpeg |
| mpg | png |
| mpeg | tif |
| m4v | tiff |
| wmv | dng |
| mkv | webp |
|  | mpo |
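Before uploading data, you may want to check which files in a local folder match the formats in the table above. The following is a minimal Python sketch that classifies files by extension. The helper names `classify_media` and `scan_directory` are hypothetical. Treating extensions case-insensitively is an assumption about how the blueprint matches files, not documented behavior.

```python
from pathlib import Path

# Extensions copied from the supported-formats table (lowercase, no leading dot).
VIDEO_EXTENSIONS = {"mov", "avi", "mp4", "mpg", "mpeg", "m4v", "wmv", "mkv"}
IMAGE_EXTENSIONS = {"bmp", "jpg", "jpeg", "png", "tif", "tiff", "dng", "webp", "mpo"}


def classify_media(path: str) -> str:
    """Return "video", "image", or "unsupported" based on the file extension.

    Matching is case-insensitive (an assumption), so "clip.MP4" counts as video.
    """
    ext = Path(path).suffix.lower().lstrip(".")
    if ext in VIDEO_EXTENSIONS:
        return "video"
    if ext in IMAGE_EXTENSIONS:
        return "image"
    return "unsupported"


def scan_directory(directory: str) -> dict[str, list[str]]:
    """Group the file names in a directory (non-recursive) by media type."""
    groups: dict[str, list[str]] = {"video": [], "image": [], "unsupported": []}
    for entry in sorted(Path(directory).iterdir()):
        if entry.is_file():
            groups[classify_media(entry.name)].append(entry.name)
    return groups
```

Files reported under `"unsupported"` are likely to be skipped or to cause errors, so you can remove or convert them before running the blueprint.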

# Deep Dive

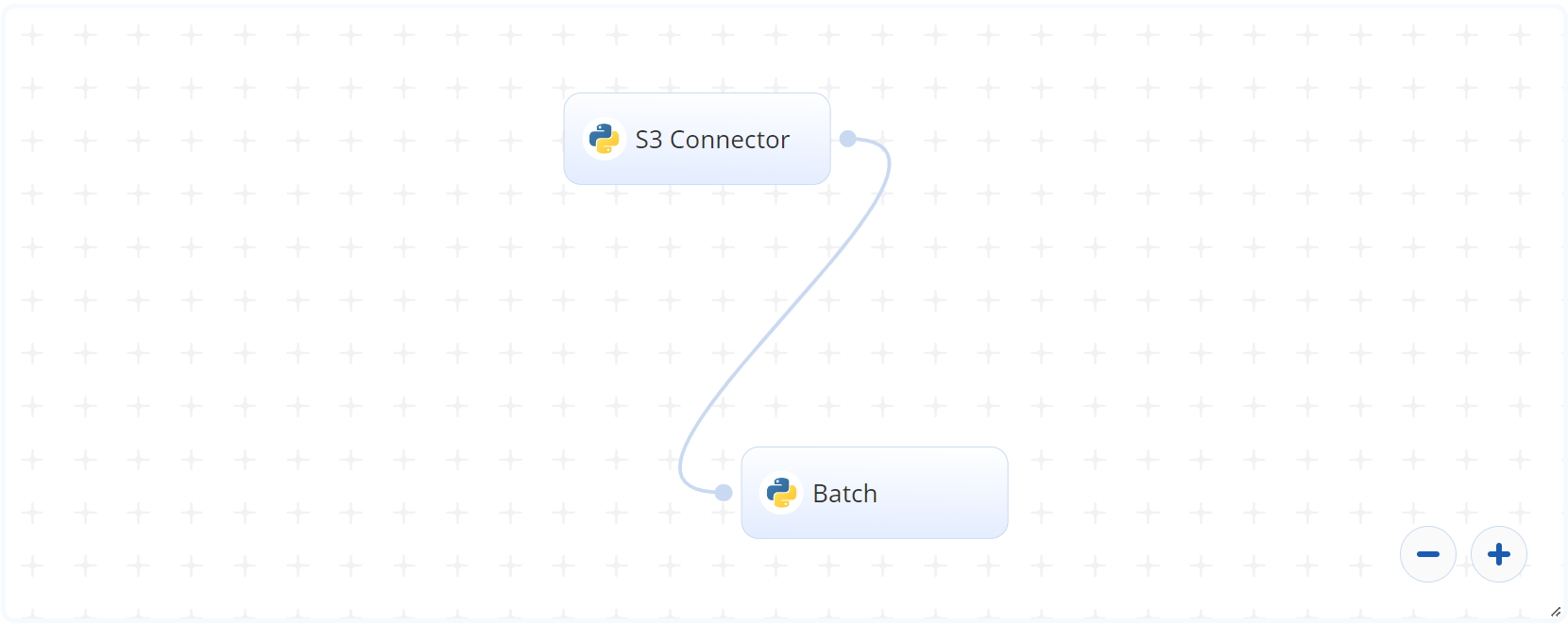

The following flow diagram illustrates this batch blueprint’s pipeline:

# Flow

The following list provides a high-level flow of this blueprint’s run:

- In the S3 Connector, the user provides the directory path where the object images/videos are stored.

- In the Batch task, the user provides the S3 location for

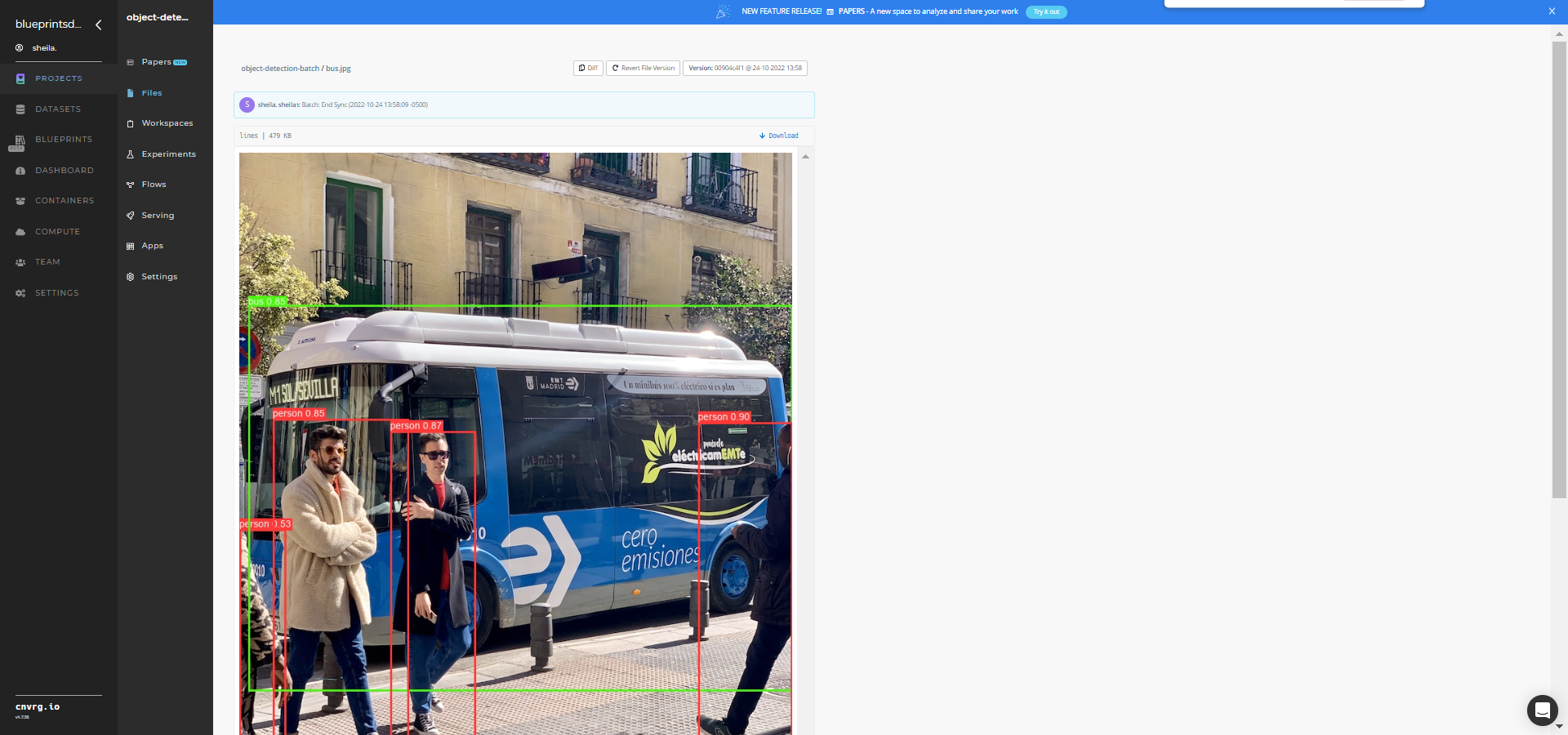

sourcewhich contains the stored images/videos. - The blueprint outputs same-named images/videos of the detected objects with their bounding boxes and object labels.

# Arguments/Artifacts

For more information on this blueprint’s tasks, its inputs, and outputs, click here.

# Inputs

- `--source` is the path to the directory containing the images and videos to be processed.

- `--weights` is the path to the weights file. Provide this argument to use custom weights trained with this blueprint's counterpart training blueprint. Otherwise, leave it empty to use the default weights.
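The Batch task expects `--source` in the S3 Connector's mount format, `/input/s3_connector/<prefix>`, as in the example path `/input/s3_connector/object_detection_batch` given in the Instructions. The following minimal sketch builds and sanity-checks that value from an S3 prefix, assuming that format. `build_source_path` and `has_connector_format` are hypothetical helpers and are not part of the blueprint.

```python
import posixpath

# Mount point used by the S3 Connector in this document's path examples.
S3_CONNECTOR_ROOT = "/input/s3_connector"


def build_source_path(prefix: str) -> str:
    """Build a --source value from an S3 Connector prefix (hypothetical helper)."""
    cleaned = prefix.strip("/")
    if not cleaned:
        raise ValueError("prefix must not be empty")
    return posixpath.join(S3_CONNECTOR_ROOT, cleaned)


def has_connector_format(path: str) -> bool:
    """Check that a path sits below the S3 Connector mount point."""
    root = S3_CONNECTOR_ROOT + "/"
    return path.startswith(root) and len(path) > len(root)
```

For example, `build_source_path("object_detection_batch")` returns `/input/s3_connector/object_detection_batch`.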

# Outputs

The output artifacts consist of the processed videos and images. For every input video or image processed, a same-named output video or image is produced with labeled bounding boxes drawn around the detected objects.

# Instructions

NOTE

The minimum resource recommendations to run this blueprint are 3.5 CPU and 8 GB RAM.

Complete the following steps to run this object-detector blueprint in batch mode:

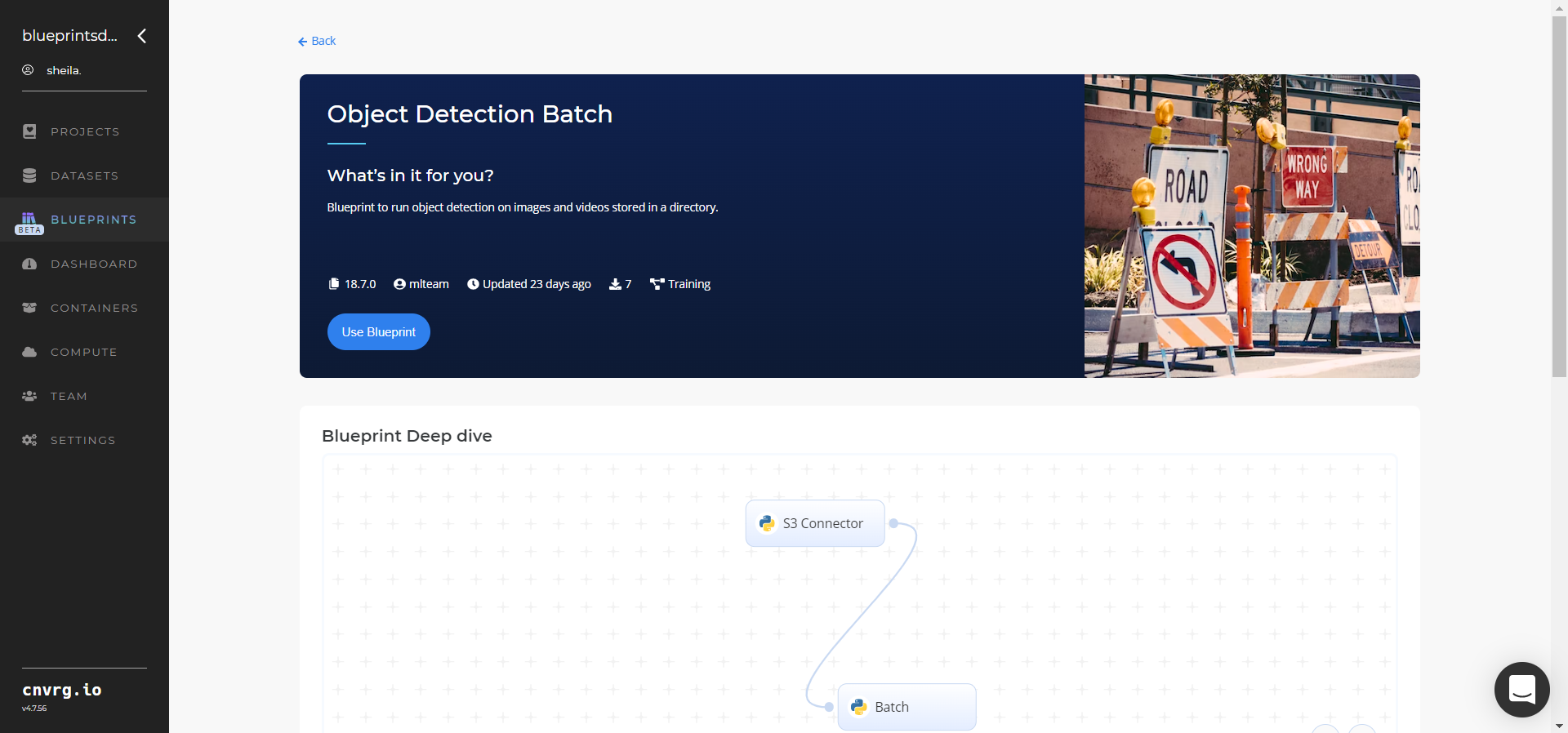

- Click the Use Blueprint button. The cnvrg Blueprint Flow page displays.

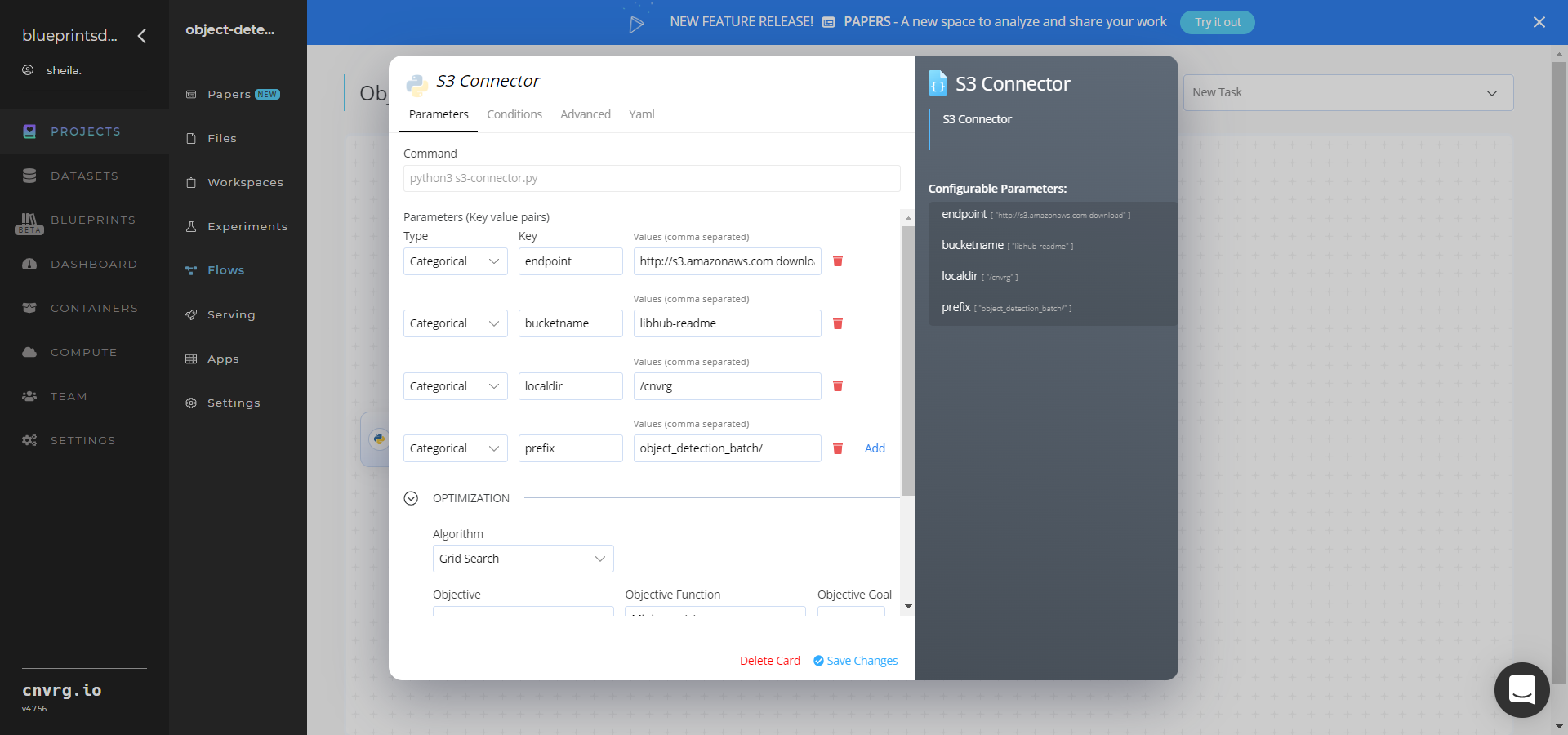

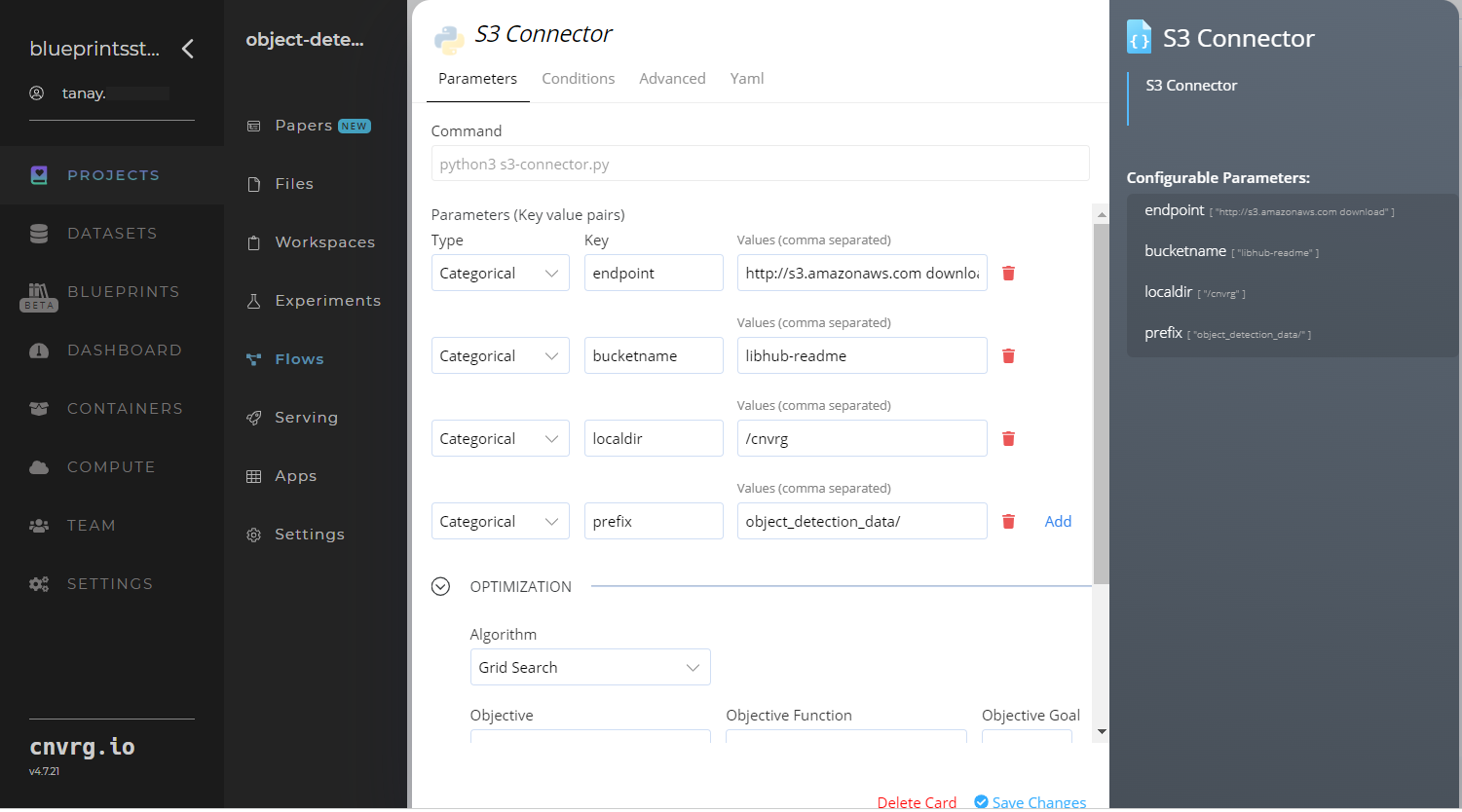

- Click the S3 Connector task to display its dialog.

- Within the Parameters tab, provide the following Key-Value pair information:

- Key: `bucketname` - Value: provide the data bucket name

- Key: `prefix` - Value: provide the main path where the data folder is located

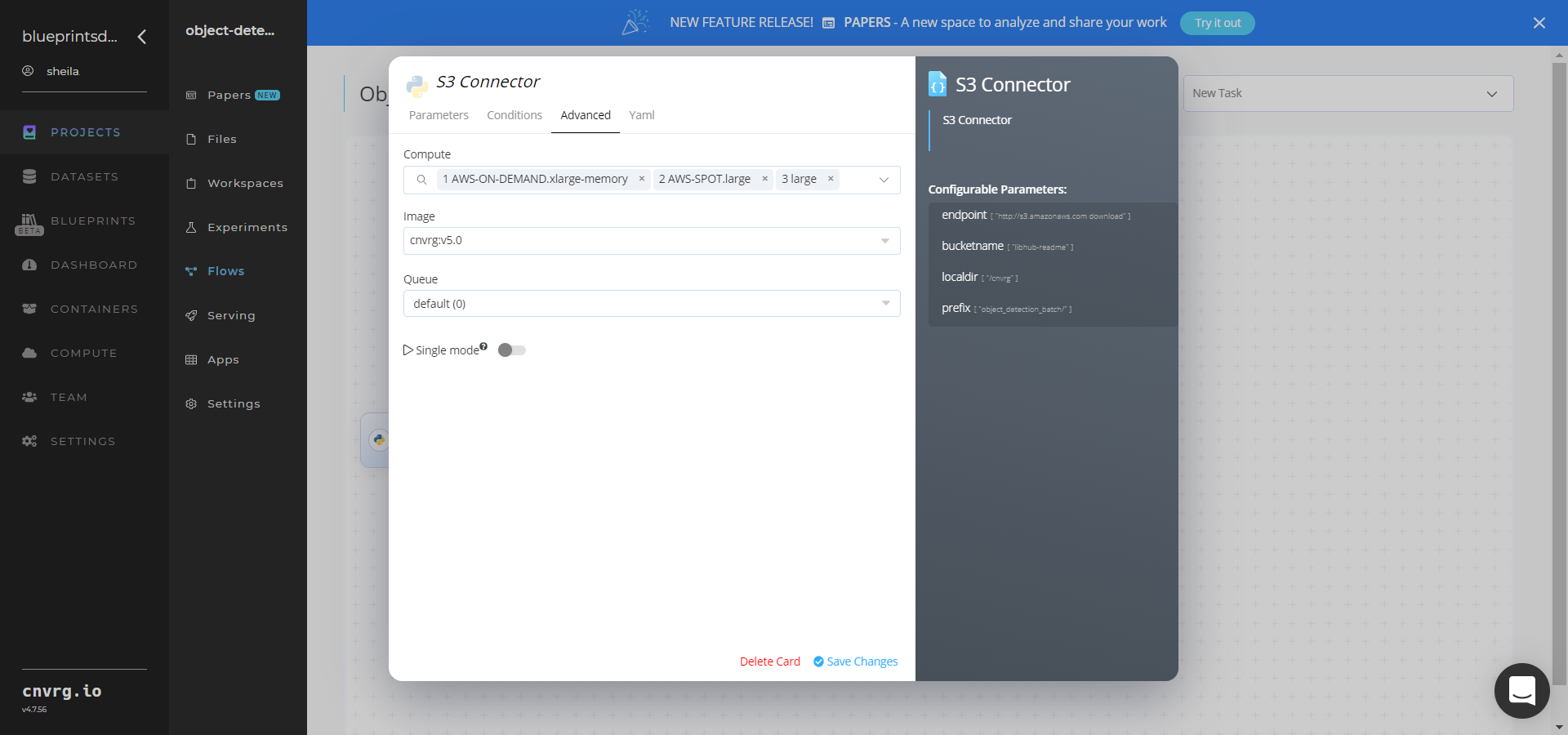

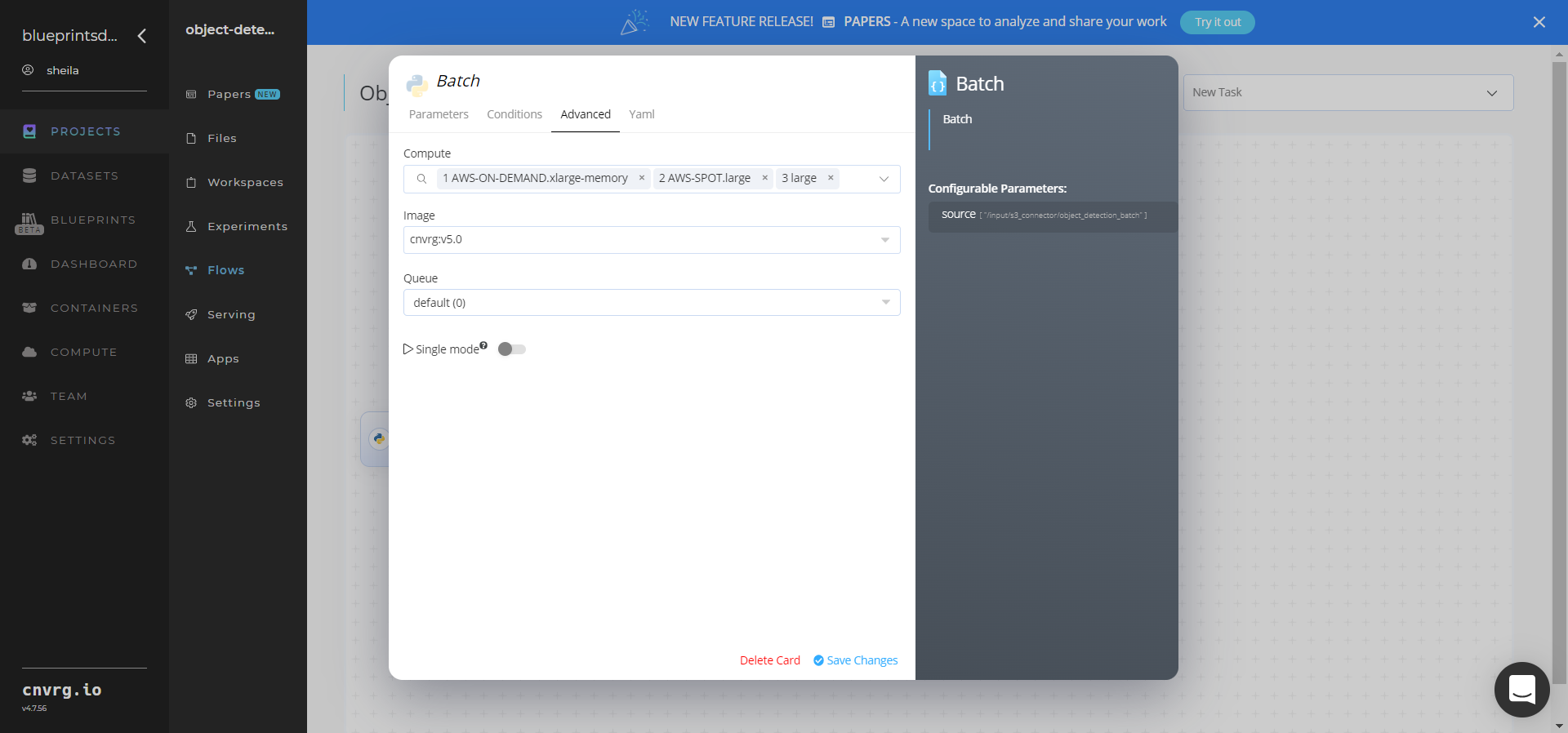

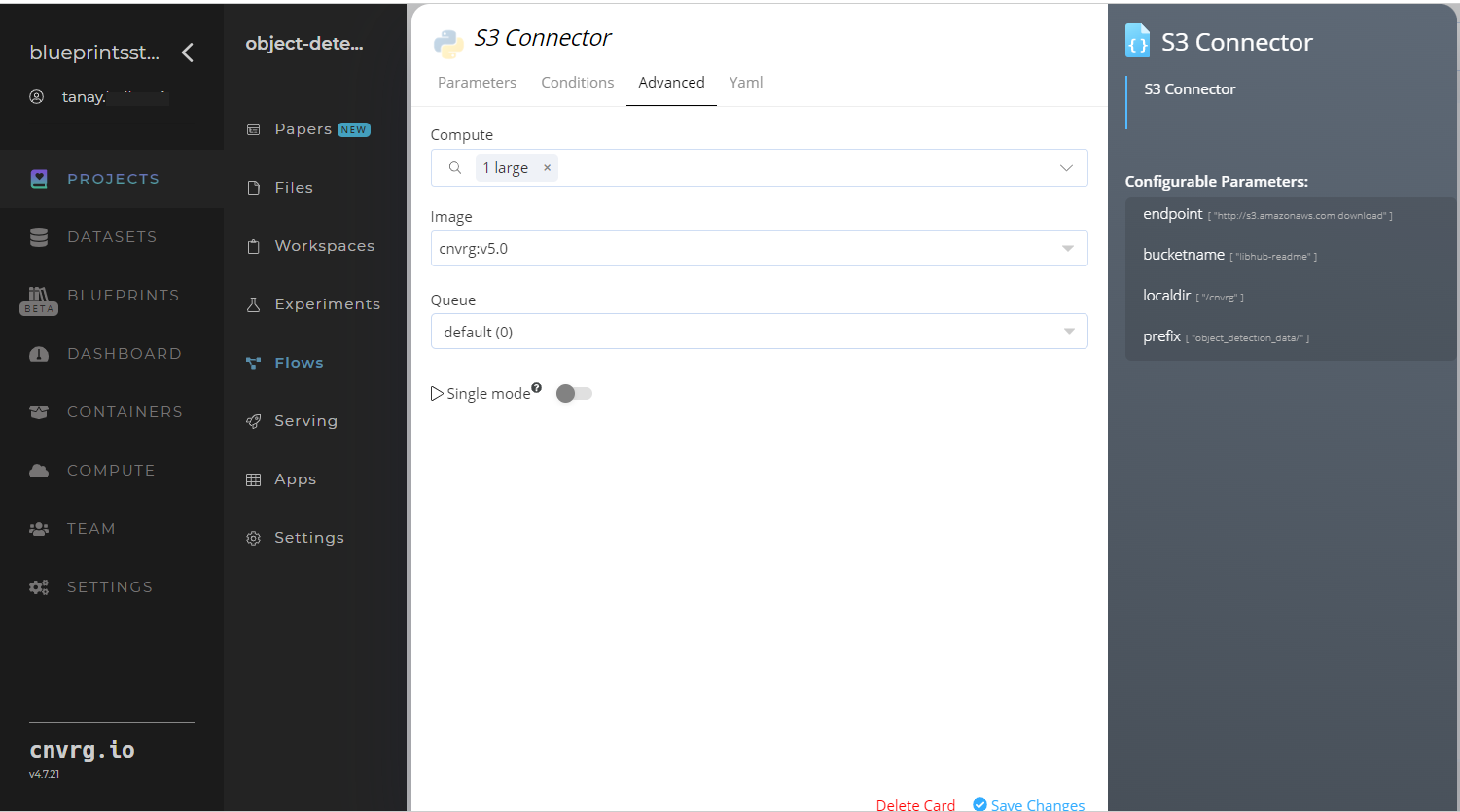

- Click the Advanced tab to change resources to run the blueprint, as required.


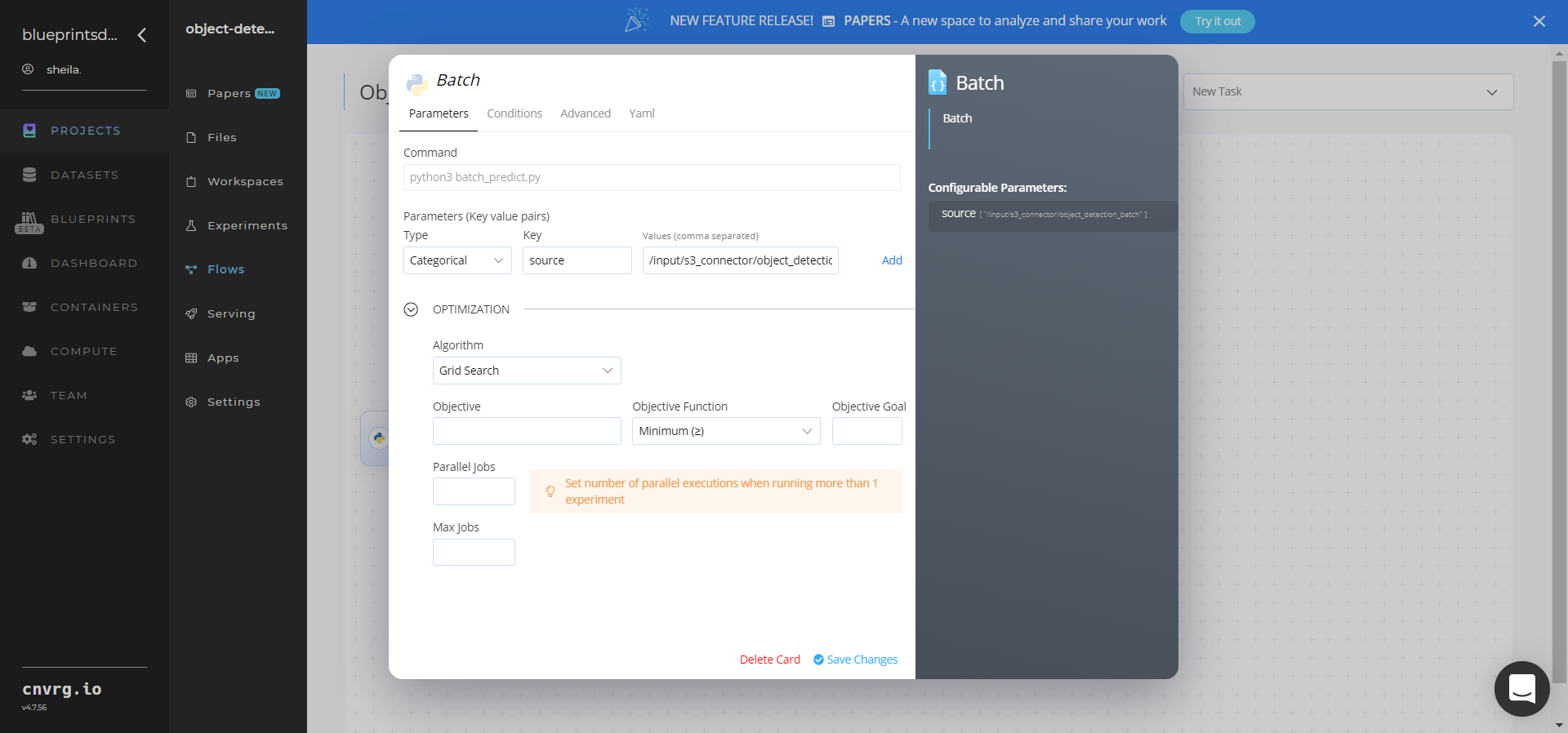

- Click the Batch task to display its dialog.

- Within the Parameters tab, provide the following Key-Value pair information:

- Key: `source` - Value: provide the S3 location containing all the images and videos, such as `/input/s3_connector/object_detection_batch`; ensure the path adheres to this format

NOTE

You can use the prebuilt example data paths provided.

- Click the Advanced tab to change resources to run the blueprint, as required.

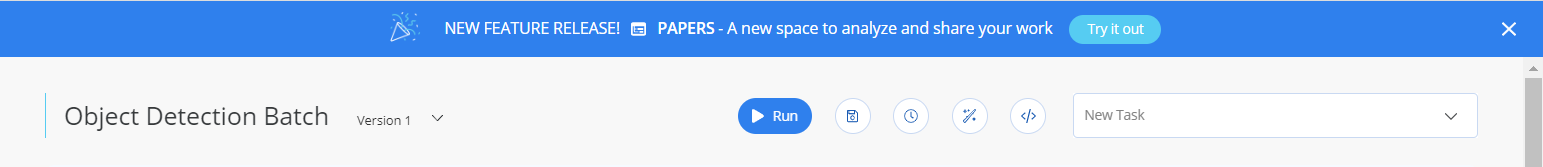

- Click the Run button.

The cnvrg software deploys an object-detector model that detects objects and their locations in a batch of images and videos.

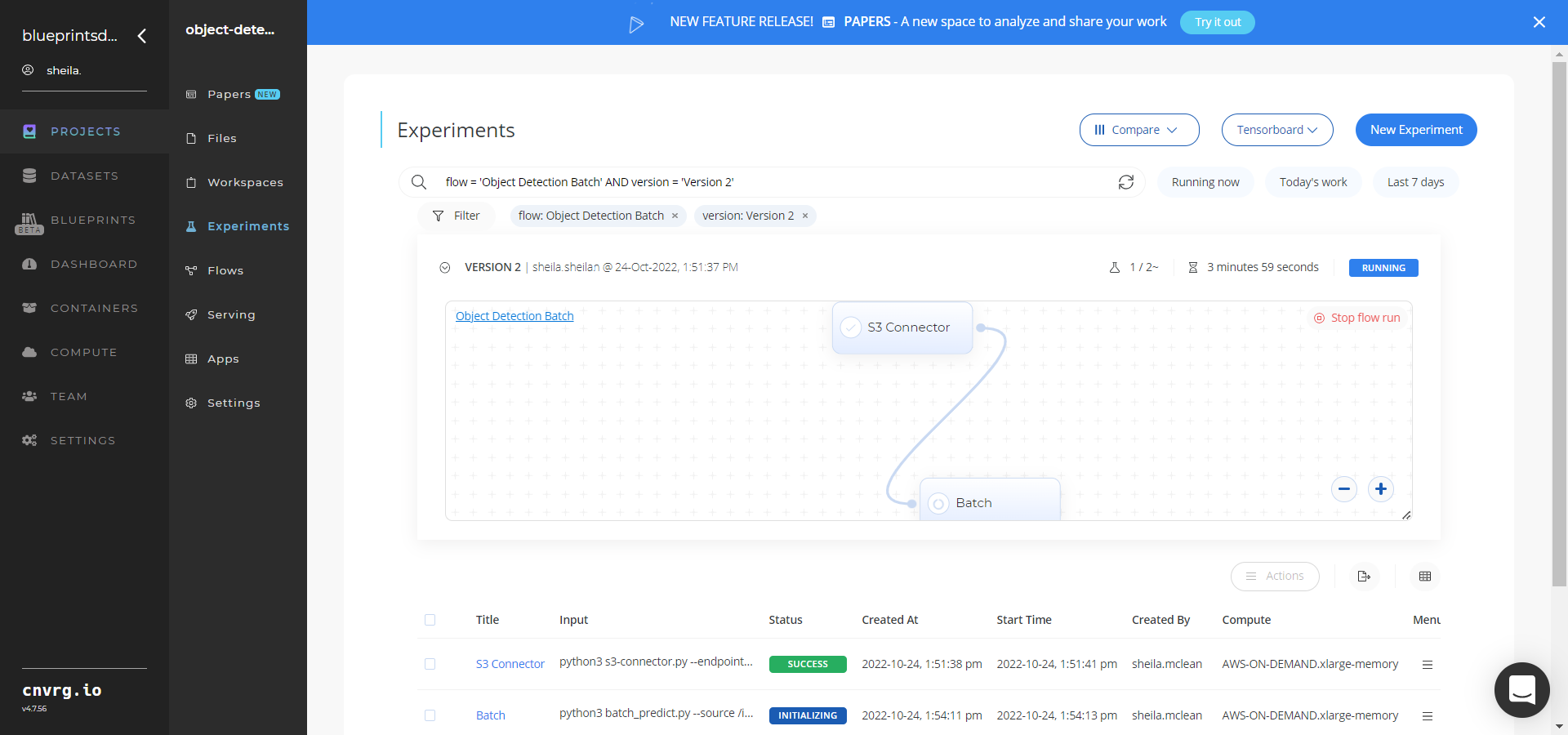

- Track the blueprint's real-time progress in its Experiments page, which displays artifacts such as logs, metrics, hyperparameters, and algorithms.

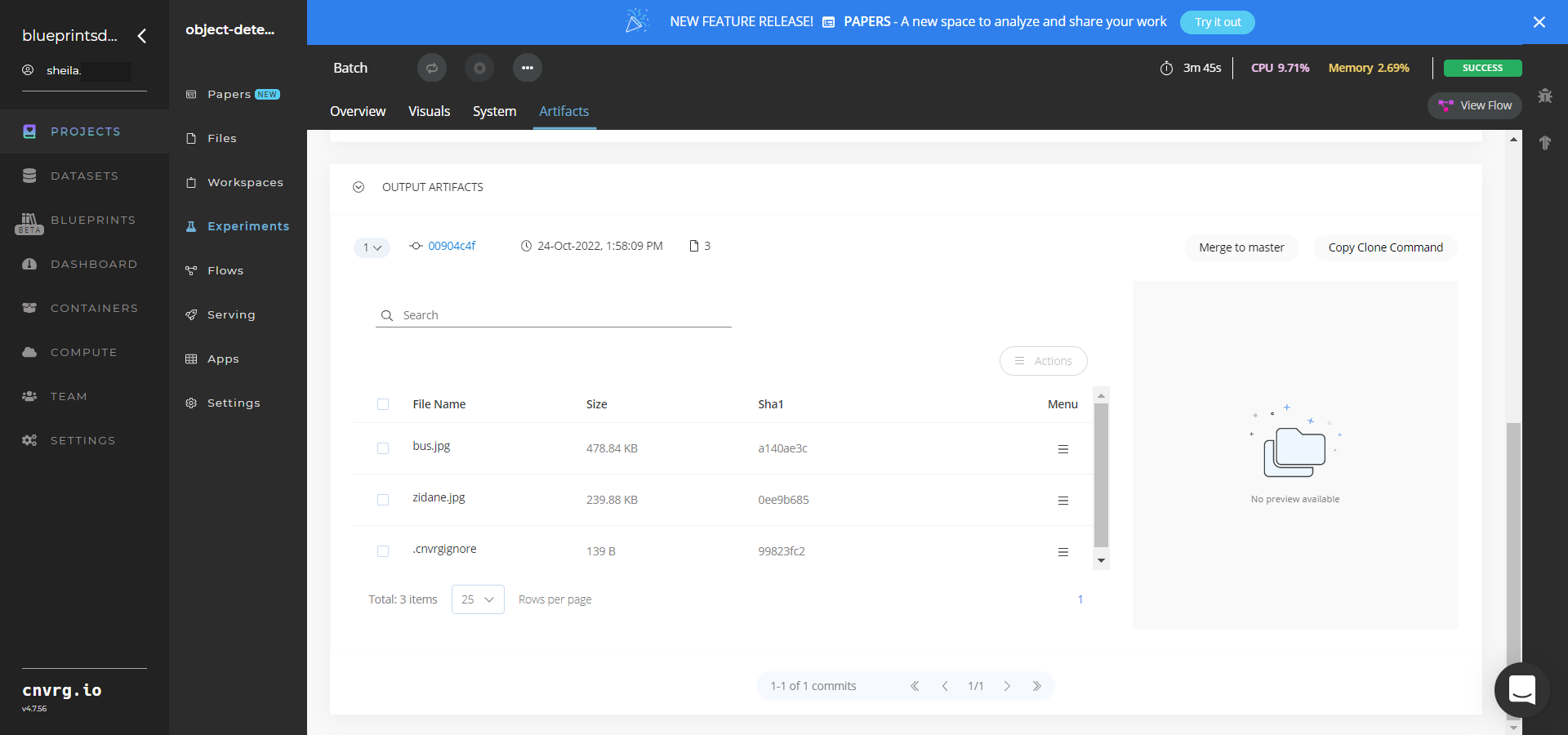

- Select Batch > Experiments > Artifacts and locate the output files.

- Select a File Name, click the Menu icon, and select Open File to view an output image or video file.

A tailored model that detects objects, draws their boundaries, and labels them in images and videos has now been deployed in batch mode. For information on this blueprint's software version and release details, click here.

# Connected Libraries

Refer to the following libraries connected to this blueprint:

# Related Blueprints

Refer to the following blueprints related to this batch blueprint:

- Object Detection Training

- Object Detection Inference

- Scene Detection Batch

- Scene Detection Train

- Scene Detection Inference

# Inference

Object detection refers to detecting instances of semantic objects of a certain class in images. The output of the object-detector model describes each object's class and coordinates, which are used to draw a box around the detected object and label it according to its class.

# Purpose

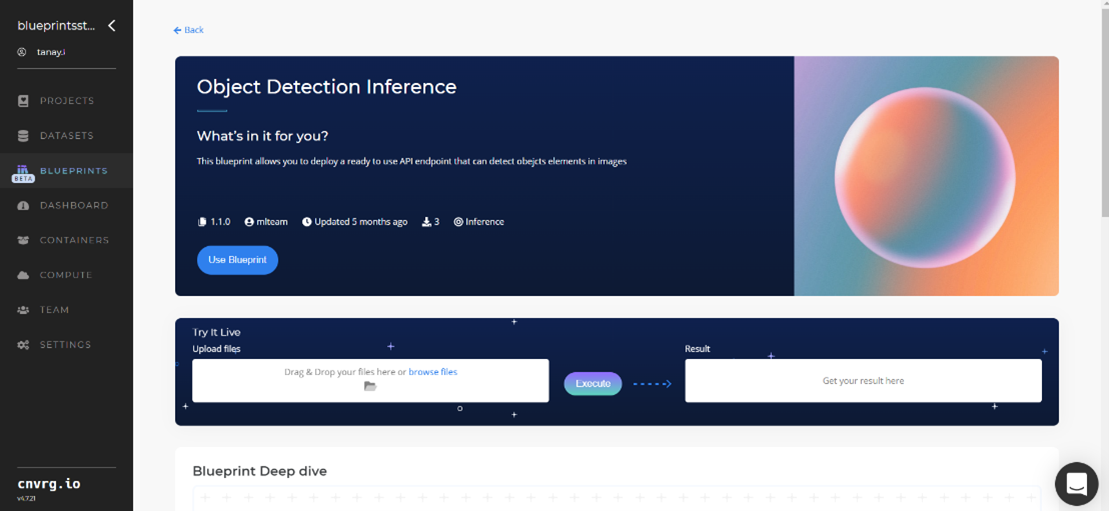

Use this inference blueprint to immediately detect objects and their positions in images. To use this pretrained object-detector model, create a ready-to-use API endpoint that can be quickly integrated with your data and application.

This inference blueprint's model was trained on Common Objects in Context (COCO) data. To detect objects specific to your business using your own custom data, run this blueprint's counterpart training blueprint, which trains the model and establishes an endpoint based on the newly trained model.

# Instructions

NOTE

The minimum resource recommendations to run this blueprint are 3.5 CPU and 8 GB RAM.

Complete the following steps to deploy this object-detector API endpoint:

- Click the Use Blueprint button. The cnvrg Blueprint Flow page displays.

- In the dialog, select the relevant compute to deploy the API endpoint and click the Start button.

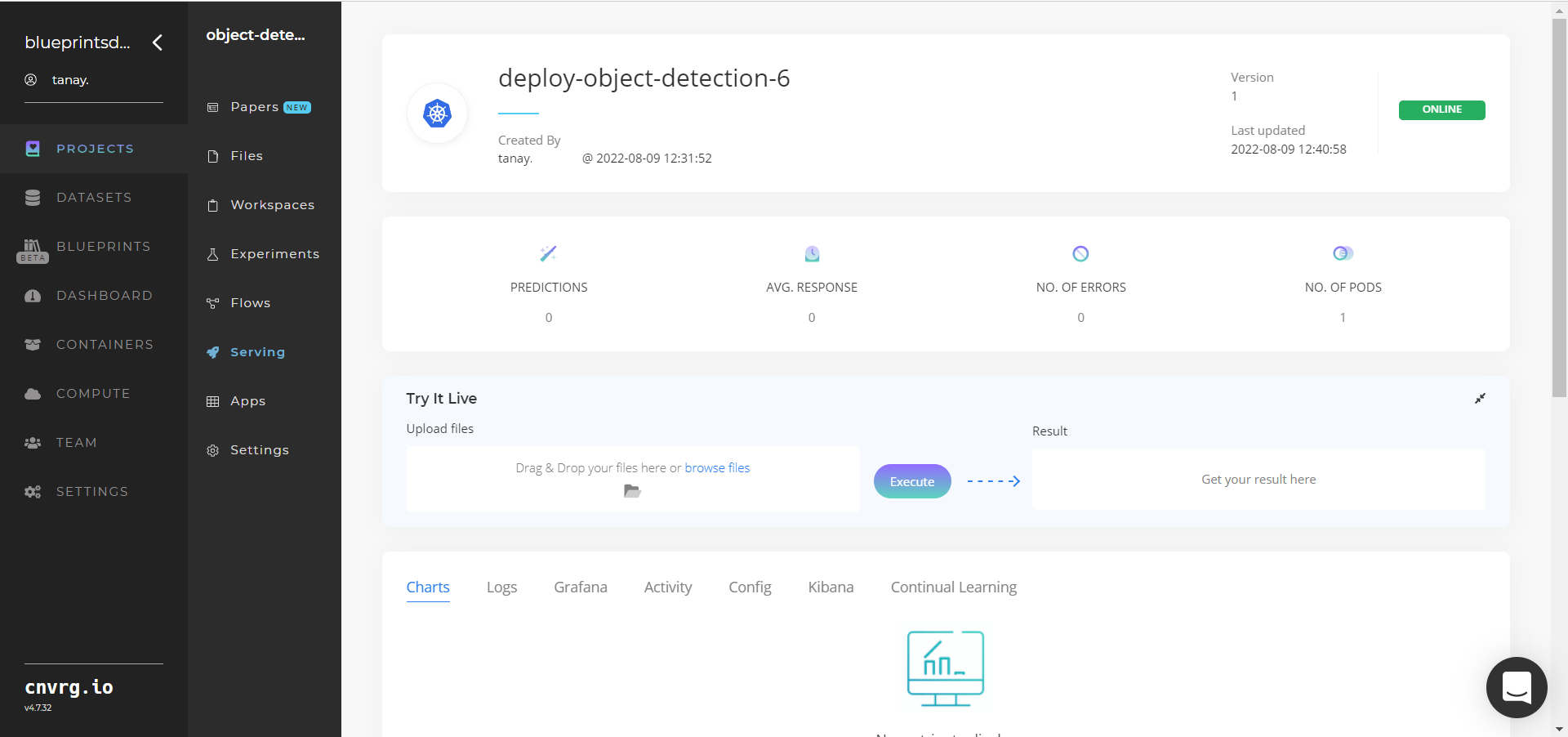

- The cnvrg software redirects to your endpoint. Complete one or both of the following options:

- Use the Try it Live section with any object-containing image to check the model.

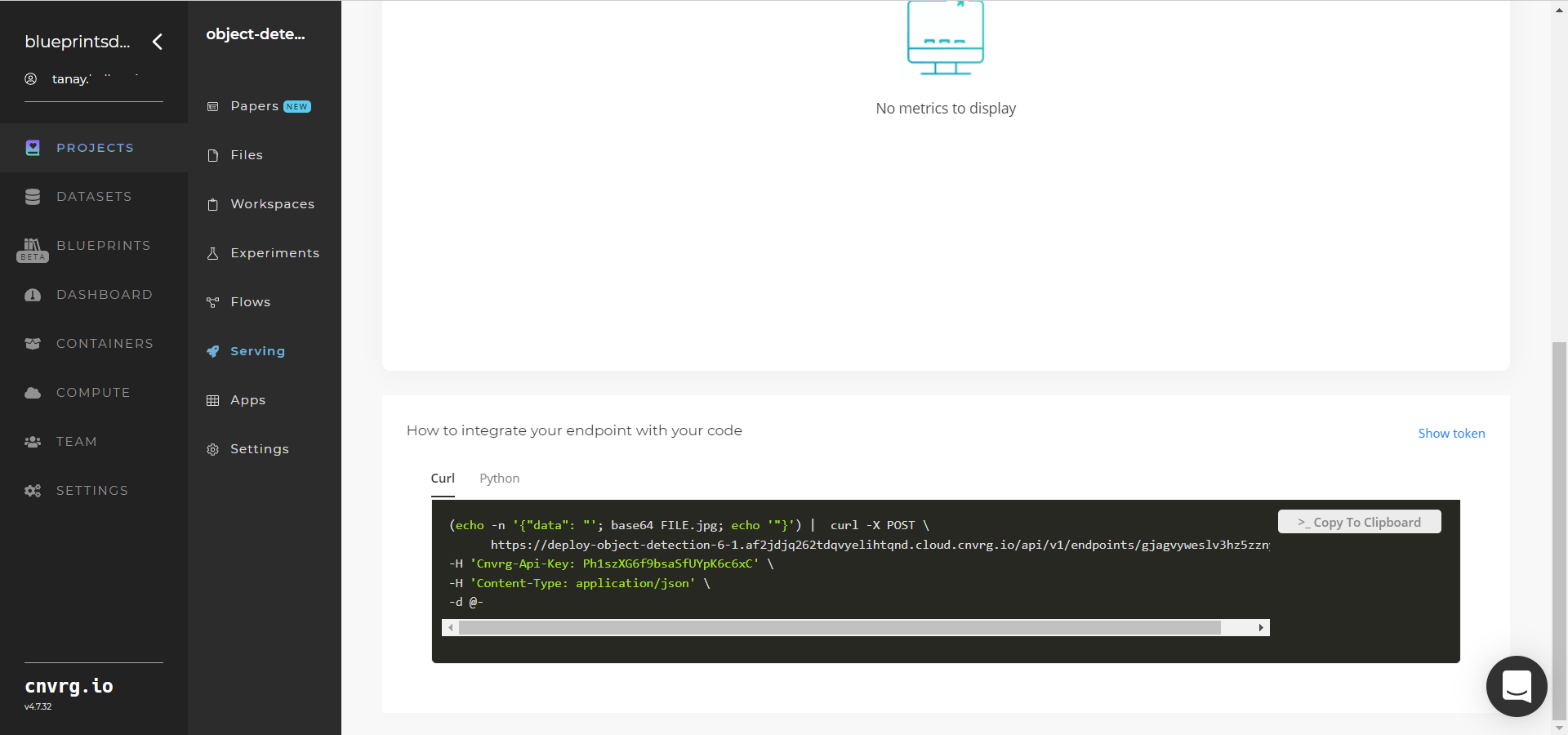

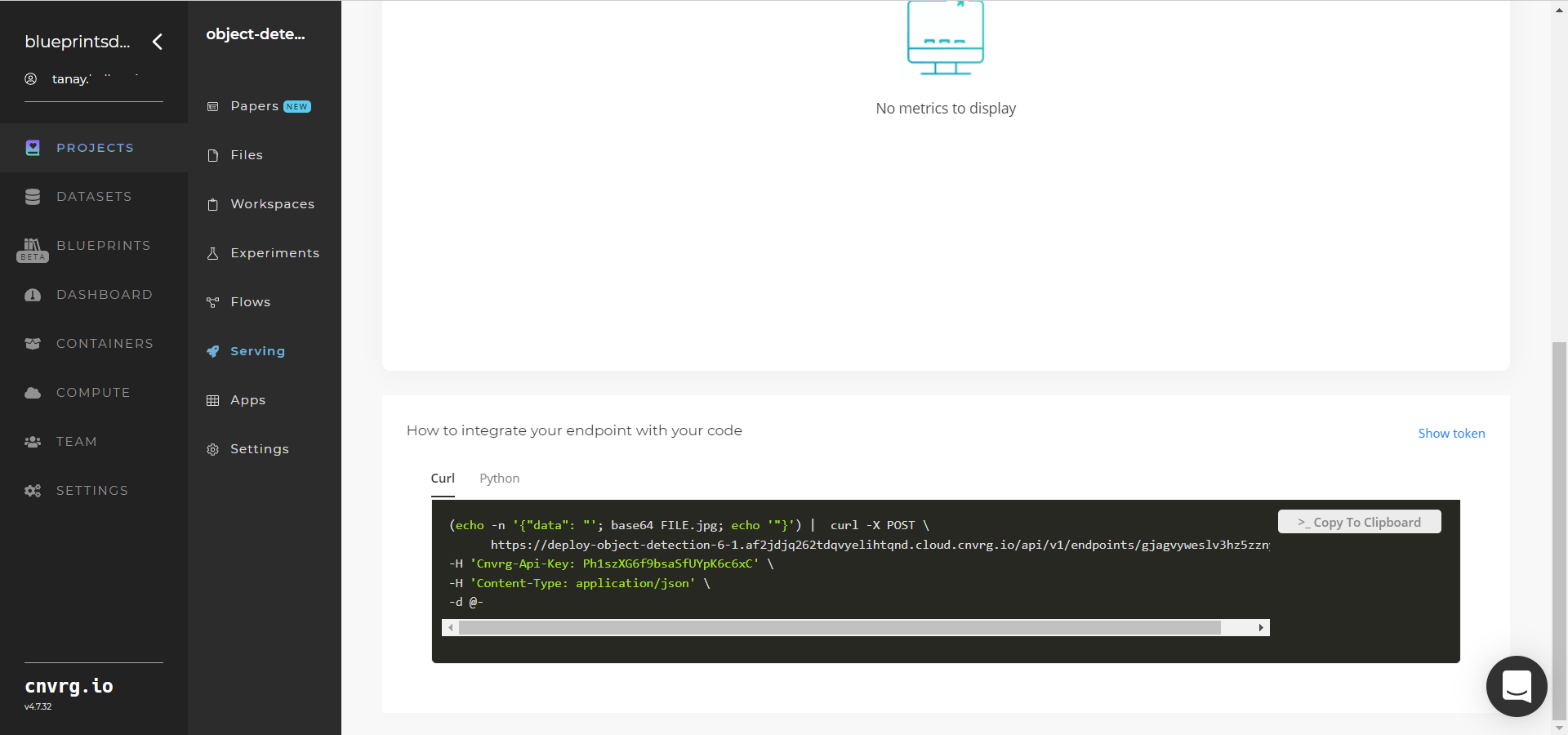

- Use the bottom integration panel to integrate your API with your code by copying in your code snippet.


An API endpoint that detects objects in images has now been deployed. For information on this blueprint's software version and release details, click here.

# Related Blueprints

Refer to the following blueprints related to this inference blueprint:

- Object Detection Train

- Object Detection Batch

- Scene Detection Train

- Scene Detection Inference

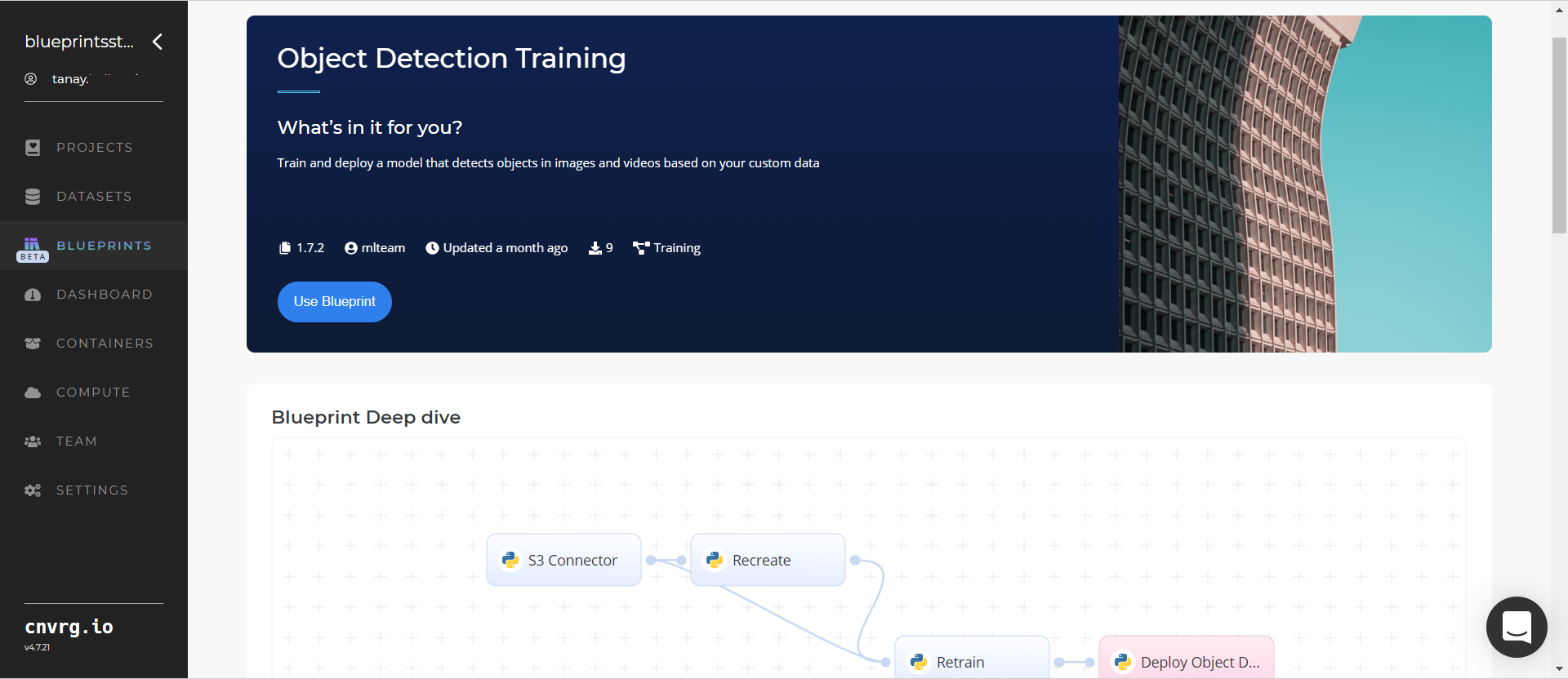

# Training

Object detection refers to detecting instances of semantic objects of a certain class in images. The output of the object-detector model describes each object's class and coordinates, which are used to draw a box around the detected object and label it according to its class.

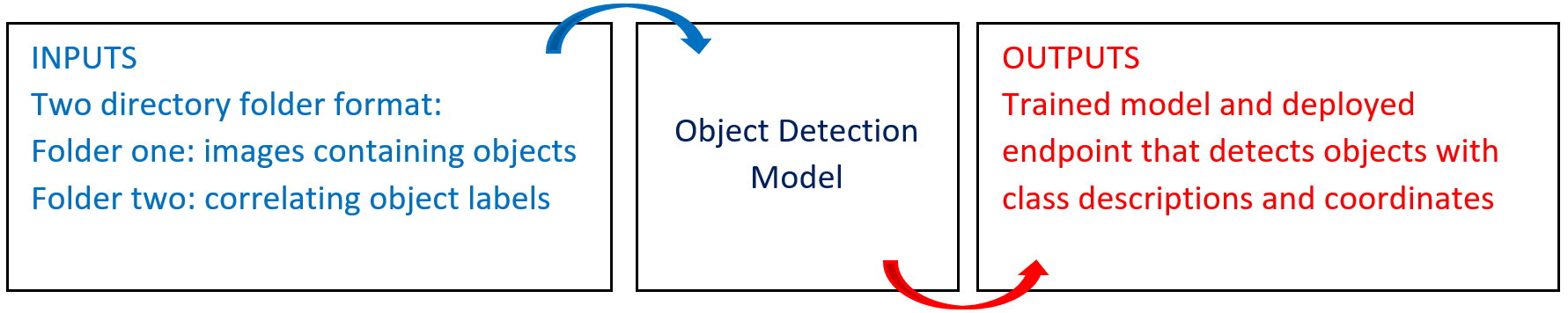

# Overview

The following diagram provides an overview of this blueprint's inputs and outputs.

# Purpose

Use this training blueprint with your custom data to train a tailored model that detects and labels objects in images. This blueprint also establishes an endpoint that can be used to detect objects in images based on the newly trained model. To train, an object-detection algorithm requires images, the locations of the objects in those images, and the names (labels) of those objects.

To train this model with your data, provide the following two folders in the S3 Connector:

- Images − A folder with the object-containing images to train the model

- Labels − A folder with labels that correlate to the objects in the images folder
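Because each label file must correlate with an image, you may want to verify the two folders before uploading them. The following is a minimal sketch. It assumes that each label file shares its image's file name stem and uses a `.txt` extension; this document does not state either convention. `pair_images_with_labels` is a hypothetical helper, and the image extension set is an assumption to adjust to your data.

```python
from pathlib import Path

# Image extensions to consider (an assumption; adjust to match your dataset).
IMAGE_EXTENSIONS = {".bmp", ".jpg", ".jpeg", ".png", ".tif", ".tiff", ".webp"}


def pair_images_with_labels(images_dir: str, labels_dir: str, label_ext: str = ".txt"):
    """Match each image to the label file with the same stem.

    Returns (pairs, missing): pairs is a list of (image_path, label_path)
    tuples, and missing lists images that have no corresponding label file.
    """
    labels = {p.stem: p for p in Path(labels_dir).glob(f"*{label_ext}")}
    pairs, missing = [], []
    for image in sorted(Path(images_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue  # skip non-image files such as notes or hidden files
        label = labels.get(image.stem)
        if label is None:
            missing.append(image)
        else:
            pairs.append((image, label))
    return pairs, missing
```

A non-empty `missing` list indicates images that would reach the training pipeline without object locations.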

# Deep Dive

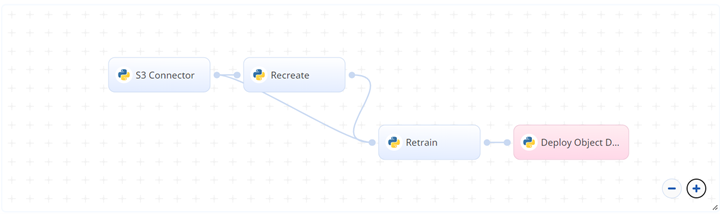

The following flow diagram illustrates this blueprint's pipeline:

# Flow

The following list provides a high-level flow of this blueprint's run:

- In the S3 Connector, the user provides the data bucket name and the path to a two-directory training dataset, with one folder containing the object images and another containing the corresponding label files.

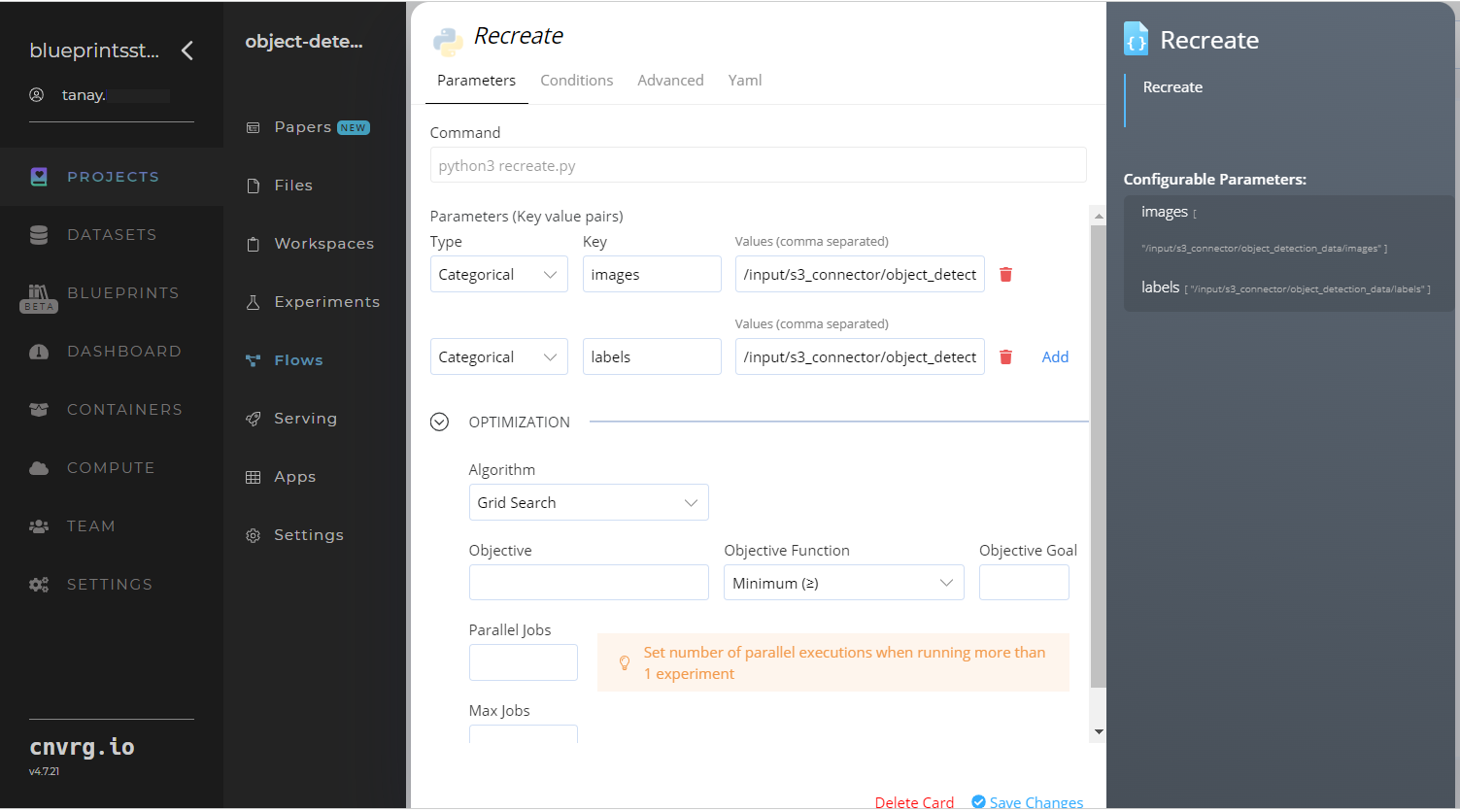

- In the Recreate task, the user provides the paths to the images and labels folders to split the datasets into train/validation/test.

- In the Retrain task, the user specifies the batch size, number of epochs, and the names of classes/categories of the objects for training. The class names represent the object categories to detect, such as person, dog, and cat. Provide the class names directly as an argument or specify the location of a CSV file containing the names under the classes column.

- The blueprint trains the tailored model using user-provided custom data to detect objects in images.

- The user can use the newly deployed endpoint to make predictions on new data with the newly trained model.

# Arguments/Artifacts

For more information and examples of this blueprint's tasks, its inputs, and outputs, click here.

# Recreate Inputs

- `--images` is the path to the folder of input images containing the objects the detector learns to detect.

- `--labels` is the path to the folder of label files containing the coordinates of the objects in each image along with the class of each object.
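This document does not specify the label file format. The Retrain outputs (`best.pt`, `last.pt`, `results.csv`, mAP@.5:.95) resemble those of YOLO-family trainers, which commonly use one object per line in the form `class x_center y_center width height`, with coordinates normalized to 0-1. Assuming that format, the following minimal sketch converts label lines to pixel corner coordinates. `Box`, `parse_label_line`, and `parse_label_file` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Box:
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_label_line(line: str, image_width: int, image_height: int) -> Box:
    """Parse one 'class x_center y_center width height' line (assumed YOLO-style,
    normalized to 0-1) and convert it to pixel corner coordinates.

    Raises ValueError if the line does not have exactly five fields.
    """
    class_id, xc, yc, w, h = line.split()
    xc, w = float(xc) * image_width, float(w) * image_width
    yc, h = float(yc) * image_height, float(h) * image_height
    return Box(int(class_id), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2)


def parse_label_file(path: str, image_width: int, image_height: int) -> list[Box]:
    """Read every non-empty line of a label file into Box objects."""
    with open(path) as f:
        return [parse_label_line(line, image_width, image_height) for line in f if line.strip()]
```

For example, the line `0 0.5 0.5 0.2 0.4` on a 640×480 image describes class 0 with corners (256, 144) and (384, 336).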

# Recreate Output

- A dataset split into train/validation/test sets. The images and labels folders each include three subfolders, named train, test, and validation, containing the corresponding images and labels.
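This document does not state the split ratios the Recreate task uses. The following is a minimal sketch of a reproducible train/validation/test split, assuming hypothetical 80/10/10 ratios. It splits (image, label) pairs together so that each image stays with its label. `split_dataset` is a hypothetical helper.

```python
import random


def split_dataset(items, train=0.8, val=0.1, seed=42):
    """Shuffle items deterministically and split them into train/validation/test.

    The ratios are illustrative assumptions; the test split receives
    whatever remains after the train and validation splits.
    """
    if not 0 < train + val <= 1:
        raise ValueError("train + val must be in (0, 1]")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return {
        "train": shuffled[:n_train],
        "validation": shuffled[n_train:n_train + n_val],
        "test": shuffled[n_train + n_val:],
    }
```

With 100 pairs and the default ratios, this yields 80 training, 10 validation, and 10 test pairs.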

# Retrain Inputs

- `--batch` is the number of images the neural network ingests before calculating the loss and readjusting its weights. For example, with a batch size of 2 and 100 images, the network takes in two training images at a time, calculates the loss, readjusts the weights, and repeats until all the training set's images have been processed.

- `--epochs` is the number of complete passes the neural network makes over the training dataset. More epochs generally improve learning up to a point: too many can lead to overfitting, and too few can lead to underfitting.

- `--class_name` sets the names of the classes for the model to learn. Class naming is important: without it, the trained model outputs only numbers (such as 0, 1, 2) for detected objects, so these numbers must be mapped to meaningful names. As input, provide double-quoted class names separated by commas (such as "Person,Cat,Dog") or specify the location of a one-column CSV file, such as `\dataset_name\input_file`.

NOTE

The order of the names must match the class indices in the label files. For example, if the label files' indices 0, 1, 2 correspond to Person, Cat, Dog respectively, then this parameter's input must follow the same "Person,Cat,Dog" order, and the CSV file must preserve that same order.
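The arithmetic behind `--batch` and `--epochs`, and the ordering rule for the class names, can be sketched in Python as follows. This sketch rests on several assumptions: the helper names are hypothetical, a final partial batch is assumed to count as one weight update (some data loaders drop it instead), and the CSV is assumed to have a header row named `classes`, as the Flow section suggests.

```python
import csv
import math
import os


def training_steps(num_images: int, batch: int, epochs: int) -> tuple[int, int]:
    """Return (weight updates per epoch, total weight updates).

    Assumes a final partial batch still triggers one update, hence the ceiling.
    """
    if num_images <= 0 or batch <= 0 or epochs <= 0:
        raise ValueError("all arguments must be positive")
    per_epoch = math.ceil(num_images / batch)
    return per_epoch, per_epoch * epochs


def load_class_names(value: str) -> list[str]:
    """Read class names from an existing CSV file (assumed header 'classes'),
    or otherwise from a comma-separated string such as "Person,Cat,Dog"."""
    if os.path.isfile(value):
        with open(value, newline="") as f:
            names = [row["classes"].strip() for row in csv.DictReader(f)]
    else:
        names = [name.strip() for name in value.split(",")]
    return [name for name in names if name]


def index_to_name(class_names: list[str]) -> dict[int, str]:
    """Map each numeric label (0, 1, 2, ...) to a name by position,
    which is why the name order must match the label files."""
    return dict(enumerate(class_names))


print(training_steps(100, 2, 10))  # (50, 500)
print(index_to_name(load_class_names("Person,Cat,Dog")))  # {0: 'Person', 1: 'Cat', 2: 'Dog'}
```

With the example above (batch size 2, 100 images), each epoch performs 50 weight updates, so 10 epochs perform 500.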

# Retrain Outputs

- `best.pt` is the file containing the retrained neural network weights that yield the highest scores. Download and save these weights to make future inferences on images containing the trained objects.

- `last.pt` is the file containing the retrained neural network weights from the last epoch. Though these weights can also be used for future object detection inferences, the cnvrg team doesn't recommend them because they can perform worse than the best weights described above.

- `Trainresults.csv` is the file containing all metrics evaluated on the training set.

- `Valresults.csv` is the file containing all metrics evaluated on the validation set.

Both metric CSV files have the following column/row format:

- The Images column contains the overall total number of images, the Labels column contains the total number of labels available per class, and the mAP@.5 and mAP@.5:.95 columns contain the respective mean average precision values.

- The All row contains the average of the evaluation metrics across all classes.

Other output artifacts include a `results.csv` file containing the evaluations performed at every iteration; `results.png`, containing plots of the losses and evaluation metrics, a confusion matrix, precision-per-class, recall-per-class, F1 score-per-class, precision vs. recall, label distribution, and bounding box distribution; two YAML files containing the hyperparameters and training settings; and three images containing examples of validation data, training data, and predictions.
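To compare training runs, you may want to extract the averaged All row from `Valresults.csv` or `Trainresults.csv`. The following is a minimal sketch. It assumes a comma-separated file with a header row whose class-name column is called `Class`; that name is hypothetical, so check the actual header in your artifacts. `read_all_row` is also a hypothetical helper.

```python
import csv


def read_all_row(path: str, class_column: str = "Class") -> dict[str, str]:
    """Return the 'All' row (metrics averaged over all classes) as a dict
    keyed by column header. Raises ValueError if no such row exists."""
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            # Strip whitespace in case the file pads its values.
            if (row.get(class_column) or "").strip() == "All":
                return row
    raise ValueError(f"no 'All' row found in {path}")
```

For example, `read_all_row("Valresults.csv")["mAP@.5"]` would return the averaged mAP@.5 value as a string, provided the headers match these assumptions.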

# Instructions

NOTE

The minimum resource recommendations to run this blueprint are 3.5 CPU and 8 GB RAM.

Complete the following steps to train this object-detector model:

- Click the Use Blueprint button. The cnvrg Blueprint Flow page displays.

- In the flow, click the S3 Connector task to display its dialog.

- Within the Parameters tab, provide the following Key-Value pair information:

- Key: `bucketname` - Value: enter the data bucket name

- Key: `prefix` - Value: provide the main path to the data folder

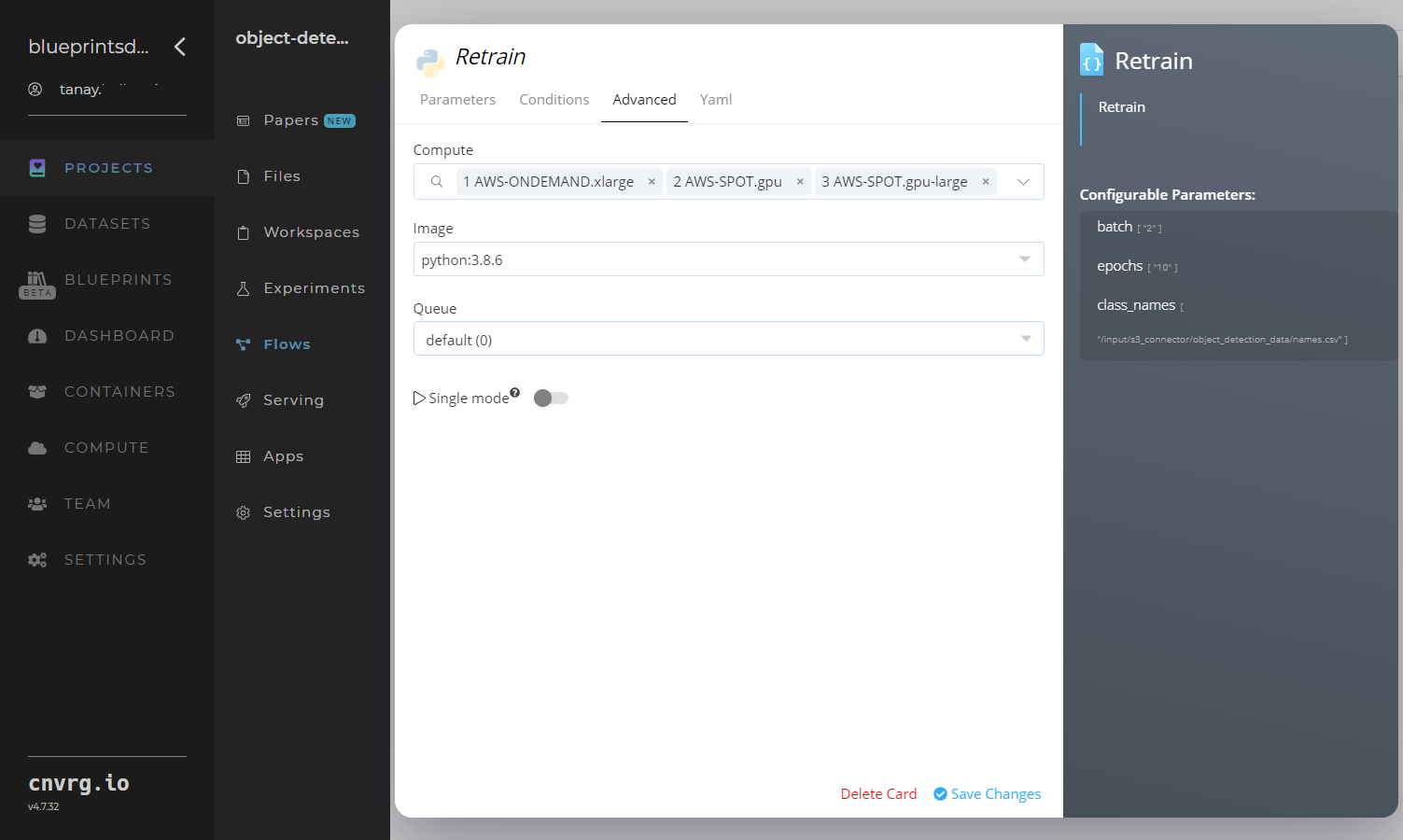

- Click the Advanced tab to change resources to run the blueprint, as required.


- Return to the flow and click the Recreate task to display its dialog.

Within the Parameters tab, provide the following Key-Value pair information:

- Key:

images– Value: provide the path to the images including the S3 prefix, with the following format:/input/s3_connector/<prefix>/images; see Recreate Inputs - Key:

labels– Value: provide the path to the labels including the S3 prefix, with the following format:/input/s3_connector/<prefix>/labels; see Recreate Inputs

NOTE

You can use prebuilt example data paths provided.

- Key:

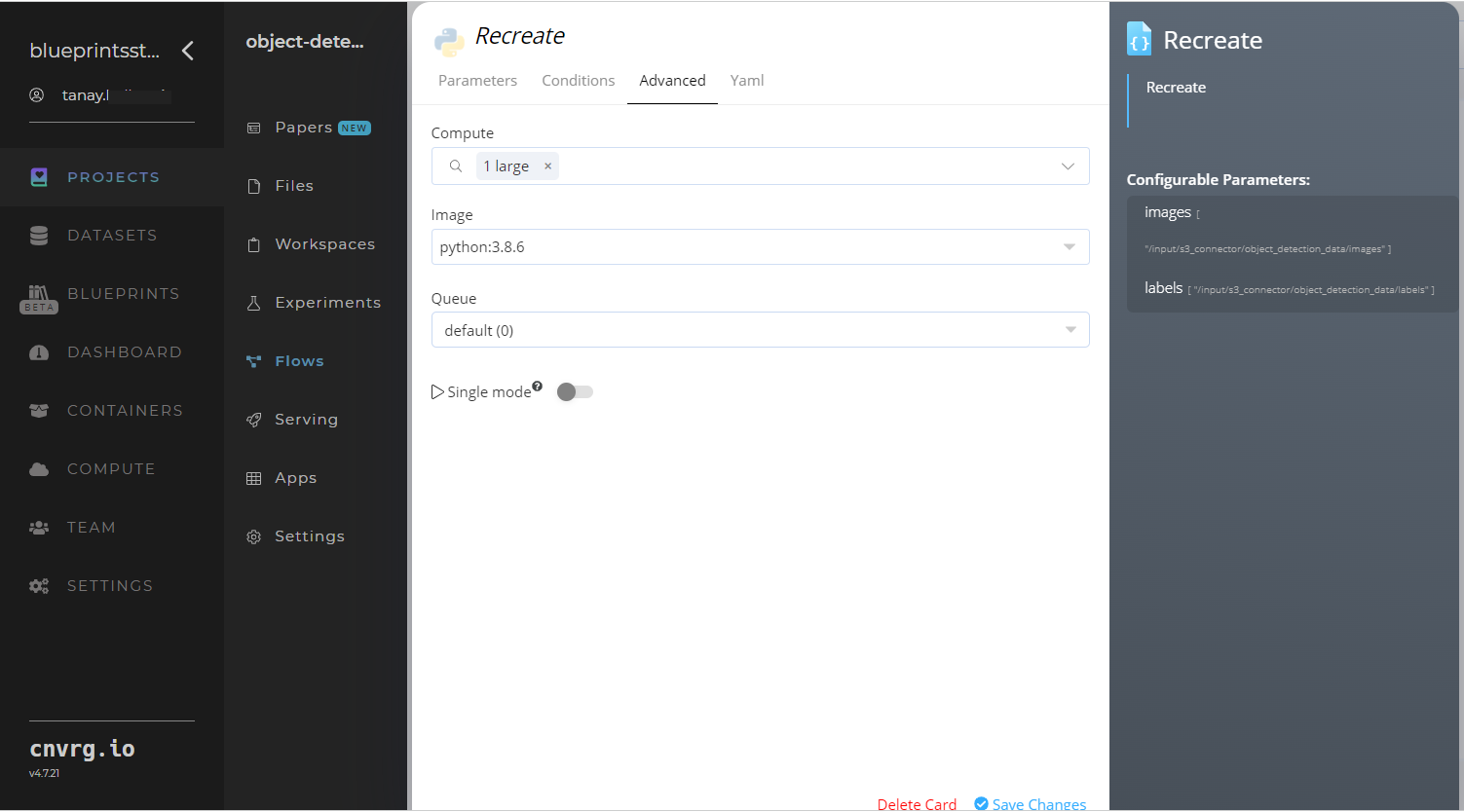

Click the Advanced tab to change resources to run the blueprint, as required.

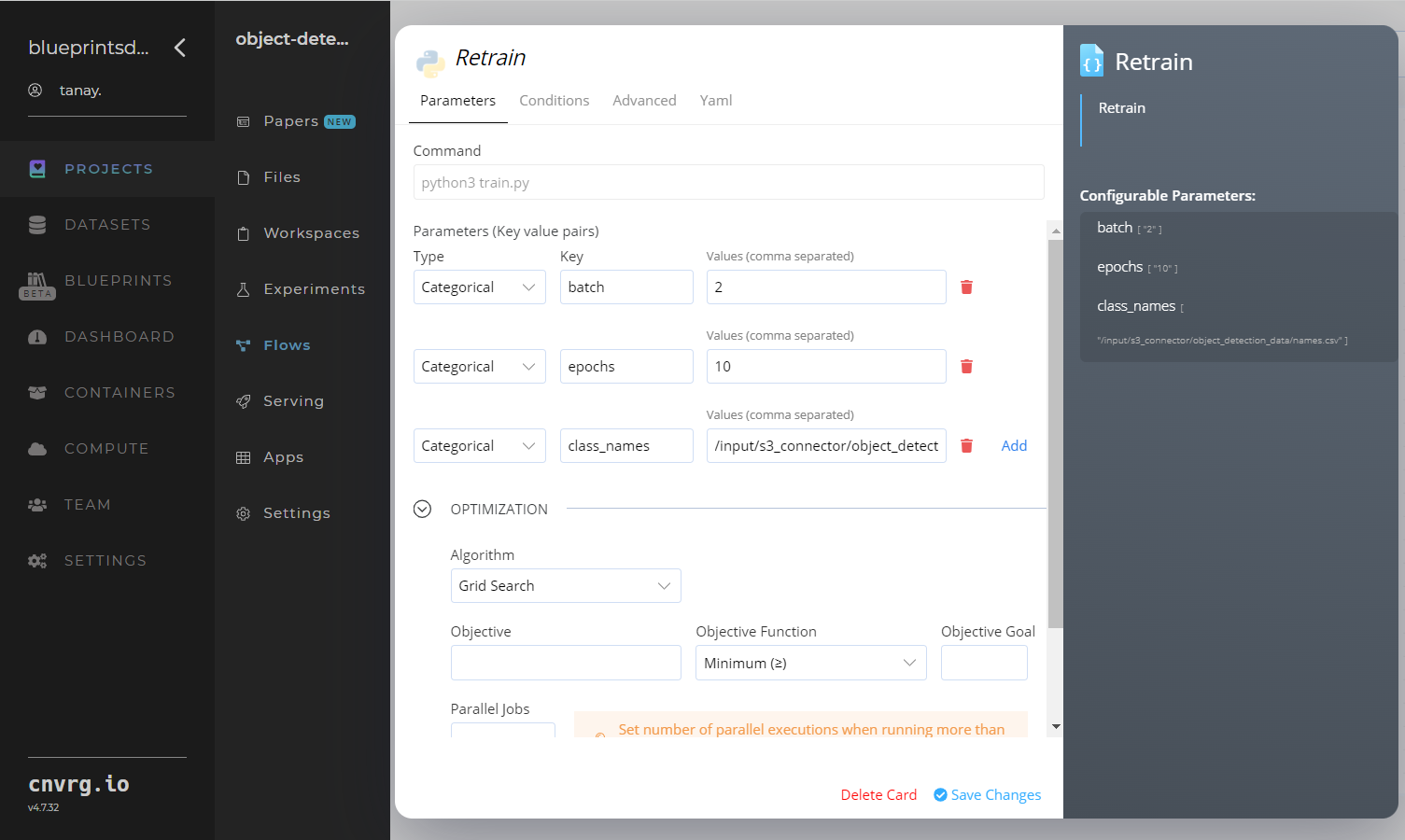

- Click the Retrain task to display its dialog.

- Within the Parameters tab, provide the following Key-Value pair information:

- Key: `batch` - Value: set the batch size the neural network ingests before calculating loss and readjusting weights; see Retrain Inputs

- Key: `epochs` - Value: set the number of times the neural network trains on the dataset; see Retrain Inputs

- Key: `class_names` - Value: provide the names of the classes for the model to learn; see Retrain Inputs

- Click the Advanced tab to change resources to run the blueprint, as required.

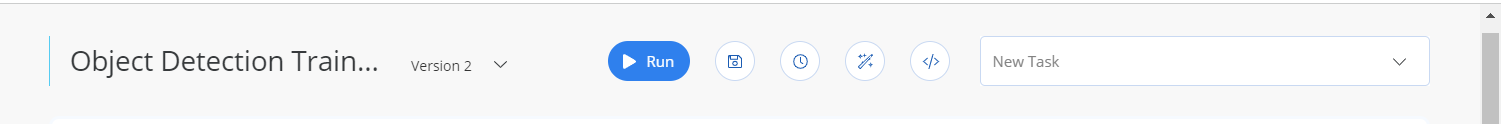

- Click the Run button.

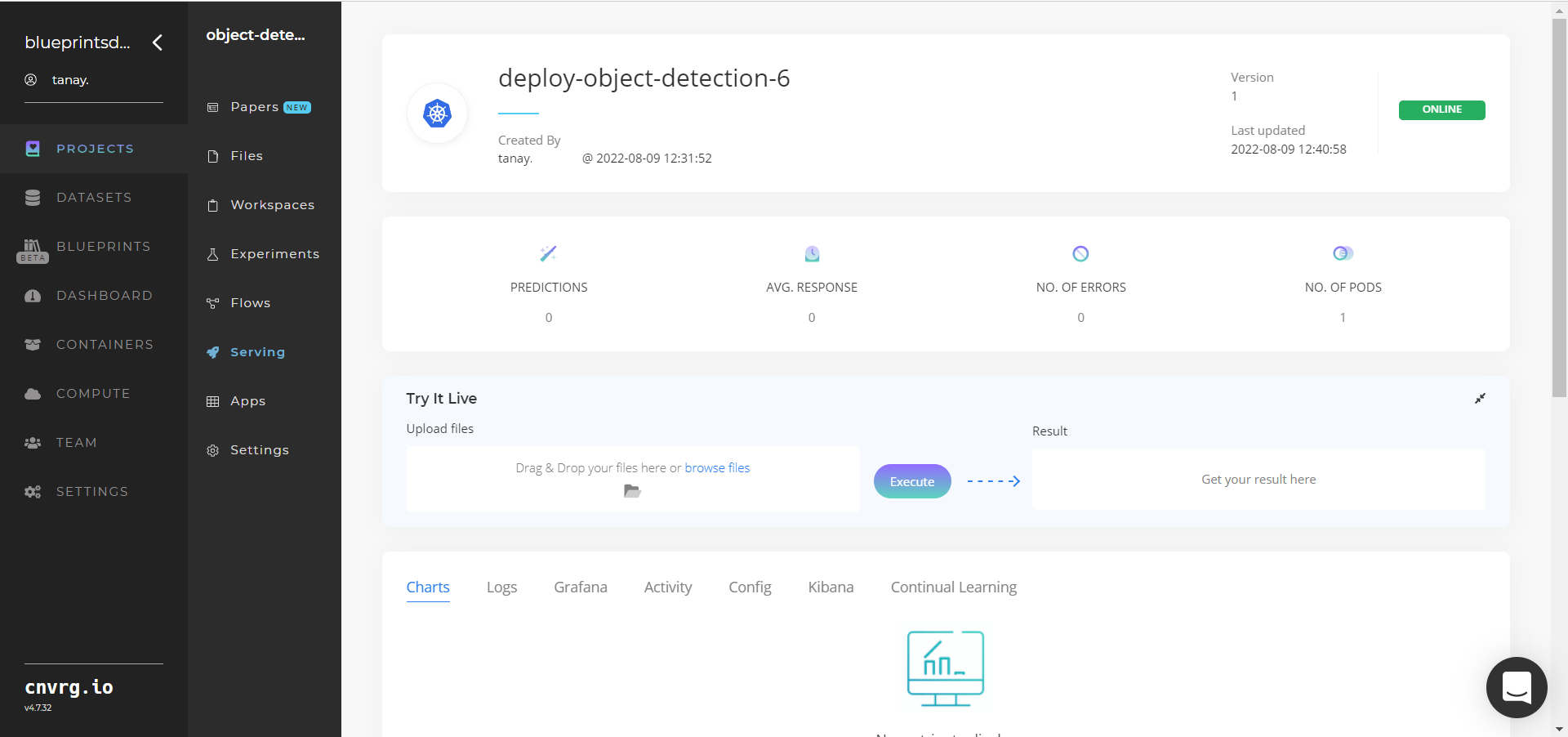

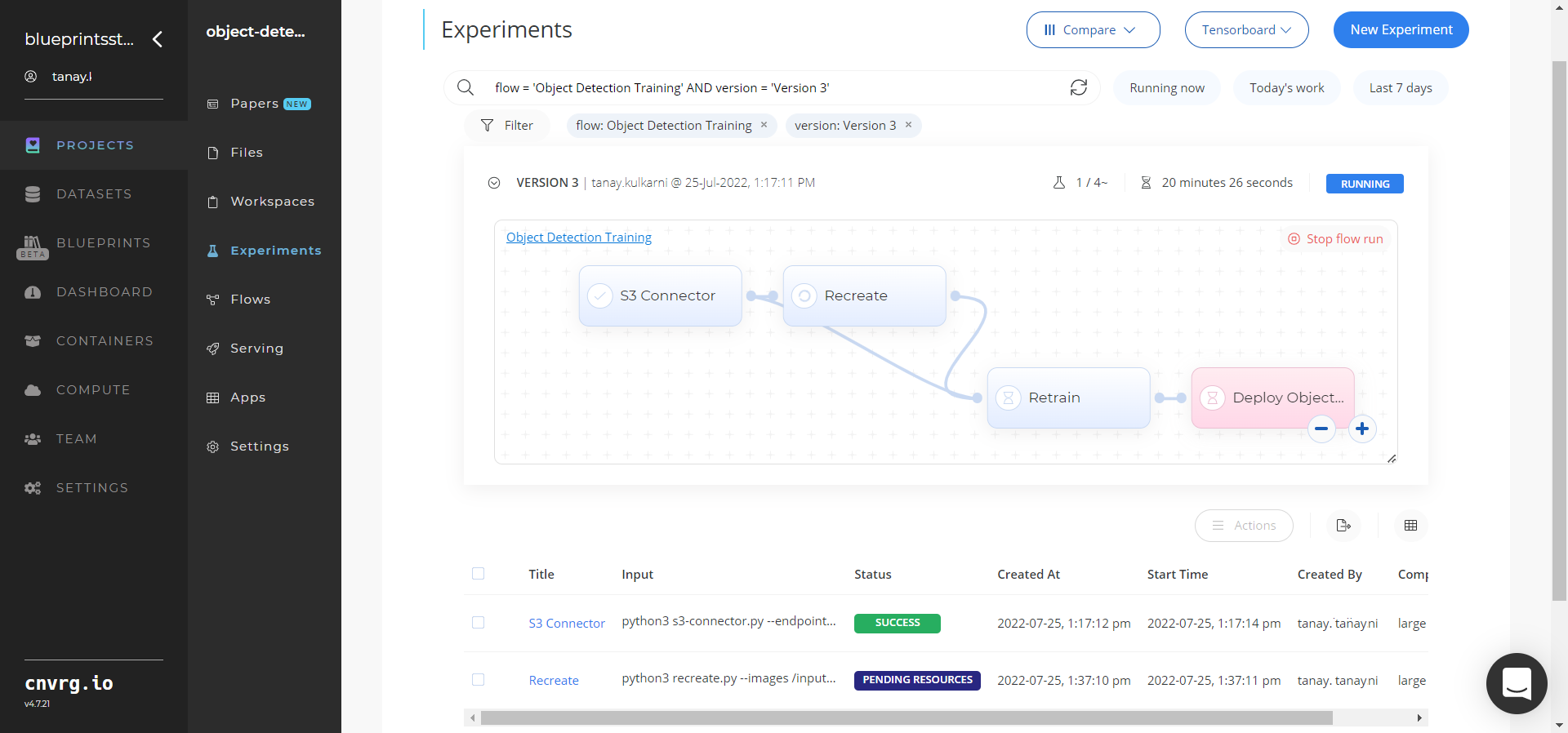

The cnvrg software launches the training blueprint as a set of experiments, generating a trained object-detector model and deploying it as a new API endpoint.

NOTE

The time required for model training and endpoint deployment depends on the size of the training data, the compute resources, and the training parameters.

For more information on cnvrg endpoint deployment capability, see cnvrg Serving.

- Track the blueprint's real-time progress in its Experiments page, which displays artifacts such as logs, metrics, hyperparameters, and algorithms.

- Click the Serving tab in the project and locate your endpoint.

- Complete one or both of the following options:

- Use the Try it Live section with any object-containing image to check the model.

- Use the bottom integration panel to integrate your API with your code by copying in your code snippet.


A custom model and API endpoint which can detect objects in images have now been trained and deployed. For information on this blueprint's software version and release details, click here.

# Connected Libraries

Refer to the following libraries connected to this blueprint:

# Related Blueprints

Refer to the following blueprints related to this training blueprint:

- Object Detection Inference

- Object Detection Batch

- Scene Detection Train

- Scene Detection Inference