# MetaGPU - deprecated from 08/2024

MetaGPU enables GPU sharing between multiple Kubernetes workloads, thus optimizing the use of existing resources and reducing operating costs.

With MetaGPU, each GPU in your cluster can be divided into 100 MetaGPU units that can be dynamically allocated to different jobs as needed.

This guide explains how to install MetaGPU on your Kubernetes cluster and how to verify that all components are functioning as intended.

See also the MetaGPU Overview

# Enable

Before proceeding, ensure that the following prerequisites are met:

- Kubernetes cluster version > 1.24

- Nodegroup with NVIDIA GPU instances

- kubectl tool installed and a kubeconfig with cluster access available

- cnvrg app version > v4.6.0. It is displayed in the bottom left corner of the cnvrg UI, but you can also run this command to check:

kubectl -n cnvrg get cnvrgapp

- cnvrg operator version > 4.0.0. You can check the current version by running the following command:

kubectl -n cnvrg get deploy cnvrg-operator -o yaml | grep "image: "

- helm tool (required for manual installation only)
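The version prerequisites above are all "strictly greater than" comparisons. As a minimal sketch, the helper below compares two dotted version strings. The function name `version_gt` is hypothetical and is not part of cnvrg or kubectl; you pass in the version strings you read from the commands above.

```shell
# Return success (exit code 0) when version $1 is strictly greater than version $2.
# A leading "v" (as in v4.6.0) is ignored and missing components count as 0.
# Hypothetical helper for illustration only.
version_gt() {
  awk -v a="${1#v}" -v b="${2#v}" 'BEGIN {
    na = split(a, x, ".")
    nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 1
  }'
}
```

For example, `version_gt 1.27 1.24 && echo "Kubernetes version OK"` prints the message only when the first version is newer than the second.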

# Installation

To enable MetaGPU, install the MetaGPU Device Plugin for Kubernetes in the cluster where you plan to run your GPU workloads.

- Edit the cnvrginfra CRD:

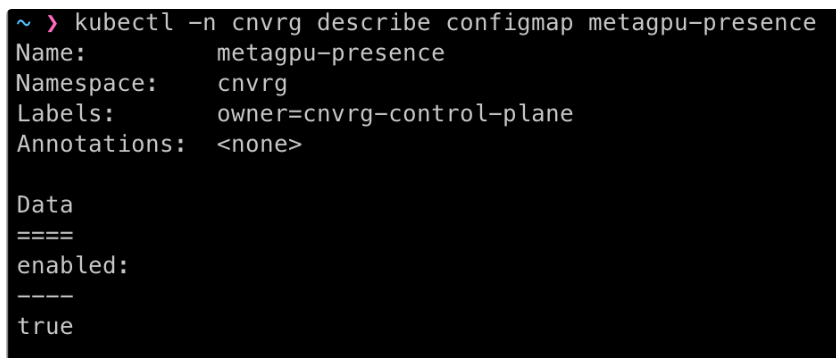

- Verify that the MetaGPU presence configmap exists:

kubectl -n cnvrg describe configmap metagpu-presence

You should see enabled: true under the Data section:

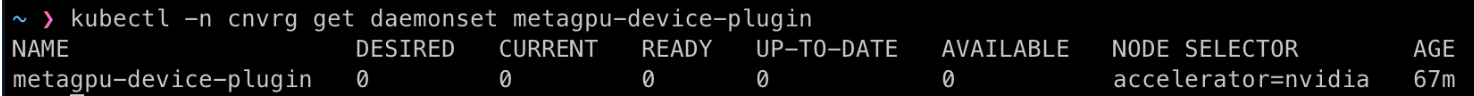

- Verify the device plugin daemonSet exists:

kubectl -n cnvrg get daemonset metagpu-device-plugin

- Ensure the GPU node is properly labeled and tainted as required:

kubectl label node GPU_NODE accelerator=nvidia

kubectl taint node GPU_NODE cnvrg.io/metagpu=present:NoSchedule

kubectl taint node GPU_NODE nvidia.com/gpu=present:NoSchedule

This can also be done at the nodegroup level, so that any newly created or scaled nodes carry the same labels and taints. How to apply this varies by cloud provider and Kubernetes distribution.
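The verification steps above can be wrapped in two small helpers. This is a sketch rather than an official procedure: the function names are hypothetical, the 120-second timeout is an arbitrary choice, and the daemonSet name and namespace are taken from the command above.

```shell
# Wait until the device plugin daemonSet reports all of its pods as ready.
# The timeout value is an assumption; adjust it for your cluster.
wait_for_metagpu_plugin() {
  kubectl -n cnvrg rollout status daemonset/metagpu-device-plugin --timeout=120s
}

# Print the value of the accelerator label, then each taint of the node
# as key=value:effect, one per line, so they can be compared with the
# label and taints applied above.
show_gpu_node_scheduling() {
  node="$1"
  kubectl get node "$node" -o jsonpath='{.metadata.labels.accelerator}{"\n"}'
  kubectl get node "$node" -o jsonpath='{range .spec.taints[*]}{.key}={.value}:{.effect}{"\n"}{end}'
}
```

Call `wait_for_metagpu_plugin` first, then `show_gpu_node_scheduling GPU_NODE` for each GPU node you labeled.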

OPTIONAL: Installing MetaGPU device plugin manually outside of cnvrg

While MetaGPU can be enabled directly from cnvrg, the device plugin itself can also be installed manually and independently, outside of the cnvrg context. This allows you to run independent fractional GPU workloads.

- Clone the MetaGPU git repo:

git clone https://github.com/cnvrg/MetaGPU.git

- Navigate to the helm chart folder:

cd MetaGPU/chart

- Install the helm chart (set ocp=true if installing on an OpenShift cluster):

helm install metagpu . --set ocp=false

- Ensure the GPU node is properly labeled and tainted as required:

kubectl label node GPU_NODE accelerator=nvidia

kubectl taint node GPU_NODE cnvrg.io/metagpu=present:NoSchedule

kubectl taint node GPU_NODE nvidia.com/gpu=present:NoSchedule

After the helm installation is complete, the MetaGPU device plugin daemonSet will deploy a pod on each GPU node, which will allow you to request the resource cnvrg.io/metagpu in your deployments.
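One way to confirm that a node advertises the cnvrg.io/metagpu resource is to read its allocatable resources. The helper below is a sketch with a hypothetical function name. It assumes the device plugin registers the resource under the name given above.

```shell
# Print how many cnvrg.io/metagpu units a node reports as allocatable.
# The dot in the resource name is escaped for kubectl's JSONPath parser.
metagpu_allocatable() {
  kubectl get node "$1" -o jsonpath='{.status.allocatable.cnvrg\.io/metagpu}{"\n"}'
}
```

Run it as `metagpu_allocatable GPU_NODE`. An empty line suggests that the plugin pod on that node has not registered the resource yet.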

Warning: Each GPU is split into 100 MetaGPU units. When requesting the resource manually, specify the number of units in your deployment's resource request. For example, to request half of a GPU:

cnvrg.io/metagpu: 50
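To show where such a request sits in a full manifest, the sketch below prints a minimal Pod spec for a given number of MetaGPU units. The function name, the pod name `metagpu-example`, and the `EXAMPLE_CUDA_IMAGE` placeholder are hypothetical. The tolerations mirror the two taints applied to the GPU node above. The value is set under `limits` because Kubernetes requires extended resources to be declared there.

```shell
# Print a Pod manifest that requests the given number of MetaGPU units.
# Pod name and container image are hypothetical placeholders; replace them.
render_metagpu_pod() {
  units="$1"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: metagpu-example
spec:
  restartPolicy: Never
  tolerations:
    - key: cnvrg.io/metagpu
      operator: Equal
      value: present
      effect: NoSchedule
    - key: nvidia.com/gpu
      operator: Equal
      value: present
      effect: NoSchedule
  containers:
    - name: main
      image: EXAMPLE_CUDA_IMAGE
      command: ["sleep", "3600"]
      resources:
        limits:
          cnvrg.io/metagpu: ${units}
EOF
}
```

To try it, pipe the output to kubectl, for example `render_metagpu_pod 50 | kubectl apply -f -` for half of a GPU.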

# How to use

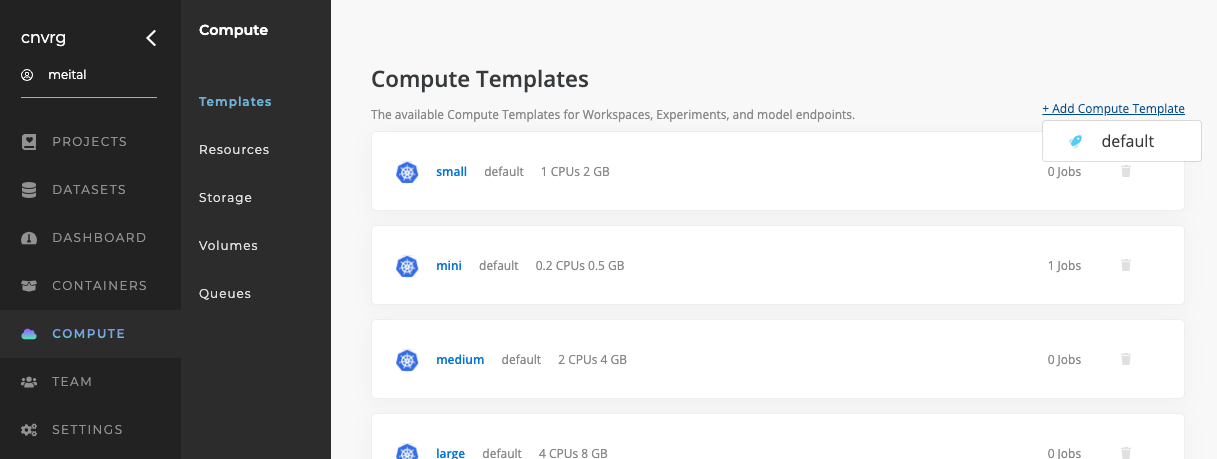

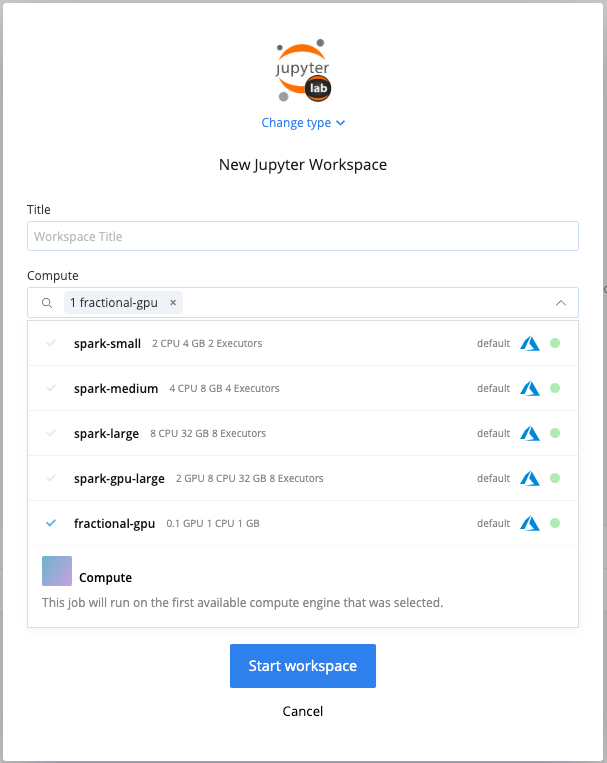

Now that MetaGPU is enabled, you can go ahead and create a custom compute template with fractional GPU specification:

- Navigate to the Compute tab → Templates → Add Compute Template → Choose the cluster you’ve enabled MetaGPU on.

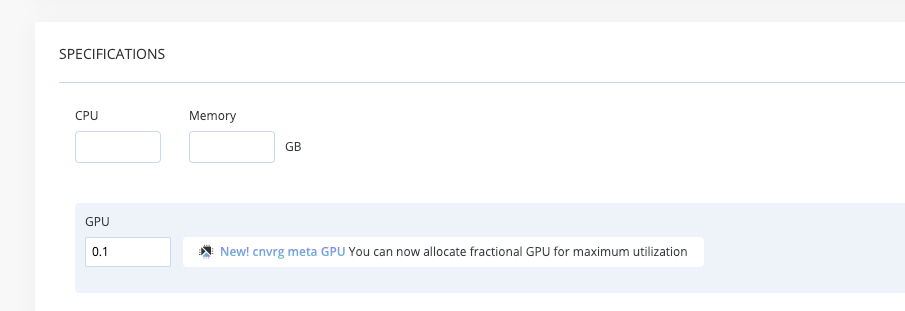

- Provide all needed specifications. For GPU, you can now input a fractional value with a single decimal place, such as 0.5. You may also input values greater than 1, for example 1.5.

Note: If you are not able to specify fractions of a GPU, the MetaGPU installation did not succeed. Please review the previous steps or contact support.

- The compute template is versatile and can be used with any cnvrg job.

Note that fractional GPU workloads can overcommit: a workload may exceed its allocated share of the GPU.

For example, a workspace running on a compute template with a GPU value of 0.5 can use the entire GPU capacity if no other consumers are using it.
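The fractional GPU values entered in a compute template relate to MetaGPU units through the 100-units-per-GPU split described earlier. The helper below sketches that arithmetic. The function name is hypothetical, and it is an assumption that cnvrg applies the same mapping internally.

```shell
# Convert a GPU value (for example 0.5 or 1.5) into MetaGPU units,
# assuming 100 units per GPU. Adding 0.5 before truncation rounds to
# the nearest whole unit and avoids floating-point drift.
gpu_to_metagpu_units() {
  awk -v gpu="$1" 'BEGIN { printf "%d\n", gpu * 100 + 0.5 }'
}
```

Under this assumption, `gpu_to_metagpu_units 0.5` gives 50 units and `gpu_to_metagpu_units 1.5` gives 150 units.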

# Release notes

Supported environments:

- AKS

- EKS

- On-premise

- GKE & OpenShift - pending QA approval

# Additional Resources

# FAQ
