# Determine the Value of Pi using Spark

This tutorial is designed to show you how to get started using Spark in cnvrg. Following this tutorial will also give you insights into some of the advanced features of cnvrg.

We will be specifically covering the following features of cnvrg:

- Editing code from the Files tab

- Running a grid search

# About Pi

This project uses example code created by Apache to help demonstrate Spark. It uses Spark and map/reduce to approximate the value of Pi.
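To make the idea concrete, here is a minimal sketch of the same Monte Carlo approach in plain Python, without Spark. It is not the contents of pi.py; the function names `inside_unit_circle` and `estimate_pi` are made up for illustration. The map step samples a random point in a square and checks whether it lands inside the unit circle, and the reduce step adds up the hits. Since the circle covers Pi/4 of the square, 4 times the hit ratio approximates Pi.

```python
import random
from functools import reduce
from operator import add


def inside_unit_circle(rng):
    """Map step: sample one point in the square [-1, 1] x [-1, 1].

    Returns 1 if the point falls inside the unit circle, else 0.
    """
    x = rng.random() * 2 - 1
    y = rng.random() * 2 - 1
    return 1 if x * x + y * y <= 1 else 0


def estimate_pi(n, seed=None):
    """Reduce step: sum the hits over n samples and scale by 4 / n."""
    rng = random.Random(seed)  # local generator so runs can be repeated
    hits = reduce(add, map(lambda _: inside_unit_circle(rng), range(n)), 0)
    return 4.0 * hits / n


if __name__ == "__main__":
    print("Pi is roughly %f" % estimate_pi(100000, seed=0))
```

In the Spark version, the samples are split across partitions so that the map step runs in parallel before the partial counts are reduced to a single total.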

# Getting the project started

On the website, navigate to the Projects tab.

Welcome to the home of your code, experiments, flows and deployments. Here everything lives and works together.

For this example, we’ll use the prebuilt example project. On the top right, click Example Projects.

Select Compute Pi using Spark and Python.

Now you’ve created a cnvrg project titled pi. The pi project dashboard is displayed. Let’s have a closer look at what’s inside the project and files.

# Model tracking and visualization

One of cnvrg’s great features is Research Assistant. Using a simple print to stdout, Research Assistant lets cnvrg automatically track any metrics you want. You can then easily compare experiments and models later on.

The format is as follows:

(‘<cnvrg_tag_NAME>’, keyvalue)

Research Assistant will automatically keep track of these and present them to you for easy comprehension. To get a better understanding, we can open the Python file and see for ourselves.

- Click the Files tab inside the pi project.

- Click pi.py to open up the code.

Great! Now the code for the Spark program will be in front of you.

As you can see on lines 37 and 46, this file already has the print statements for tracking the main parameters of the experiment:

print('cnvrg_tag_partitions:', partitions)

...

print("cnvrg_tag_PiValue value: %f" % (4.0 * count / n))

The above lines will keep track of the number of partitions used, as well as the PiValue our experiment finds.
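If you want to track more metrics of your own, a small helper can keep the tag lines consistent. The sketch below mirrors the pattern of the first print statement above (`cnvrg_tag_<name>: <value>`); the helper names `format_tag` and `log_tag` are hypothetical and are not part of any cnvrg library.

```python
def format_tag(name, value):
    """Build a tag line in the same shape as the partitions print above.

    Assumption: Research Assistant picks up lines of the form
    'cnvrg_tag_<name>: <value>' written to stdout.
    """
    return "cnvrg_tag_%s: %s" % (name, value)


def log_tag(name, value):
    """Print the tag line to stdout so it appears in the experiment log."""
    print(format_tag(name, value))


if __name__ == "__main__":
    log_tag("partitions", 2)
```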

# Adding grid search support

Because we want to see how the number of partitions affects the value of Pi, we need to run multiple experiments with different numbers of partitions. Thankfully, the built-in support for grid searches in cnvrg makes this easy.

To get started, we'll first need to update the code slightly. All that is needed for a grid search is to ensure we can parse the different parameters from the command line and use them as variables in the code.

The package we recommend using in Python is argparse.

Make sure you are still on the code editor for pi.py. Then:

- Add import argparse to the top of the file.

- Delete line 36.

- Add the following code at line 36:

      parser = argparse.ArgumentParser(description='Approximating Pi')
      parser.add_argument('--partitions', help='number of partitions', default='2')
      args = parser.parse_args()
      partitions = int(args.partitions)

- Add a commit message: "Added support for grid search using argparse".

- Save the file by pressing Submit.

Congratulations! You can now use this code to find Pi and run it in a grid search!
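To check the parsing logic on its own before running it in cnvrg, you can wrap the same argparse calls in a function and pass it a list of arguments. This is only a sketch for local testing; the wrapper name `parse_partitions` is made up and does not appear in pi.py.

```python
import argparse


def parse_partitions(argv=None):
    """Parse --partitions the same way as the snippet added to pi.py.

    argv is a list of command-line tokens; None falls back to sys.argv.
    """
    parser = argparse.ArgumentParser(description='Approximating Pi')
    parser.add_argument('--partitions', help='number of partitions', default='2')
    args = parser.parse_args(argv)
    return int(args.partitions)


if __name__ == "__main__":
    print(parse_partitions(['--partitions', '8']))  # value given on the command line
    print(parse_partitions([]))                     # falls back to the default
```

The default is kept as a string, as in the tutorial's snippet, and converted with int() afterwards, so a value passed on the command line and the default go through the same conversion.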

# Experiments

Experiments are the core of every machine learning project. When building a model, it’s all about trying new ideas, testing new hypotheses, testing hyperparameters, and exploring different neural-network architectures.

At cnvrg, we help you to experiment 10x faster and get everything 100% reproducible and trackable, so you can focus on the important stuff.

An experiment is basically a “run” of a script, locally or remotely. Usually, to run an experiment on a remote GPU, you would have to handle a lot before getting the actual results: getting data, code, and dependencies onto the machine, going back and forth over SSH to see what’s new, and more. cnvrg completely automates that, and allows you to run an experiment with a single click.

# Run a grid search

Let's run a grid search for a few different values of partitions.

On your project’s sidebar, click Experiments, then click New Experiment. In the panel that appears:

- For Command to Execute, type spark-submit pi.py.

- Click on the Parameters subsection. We will now set the values for partitions.

  - Type: Discrete (the default option)

  - Key: partitions

  - Values: 2,8,16

- For Environment > Compute, select spark-medium.

- For Environment > Image, click cnvrg_spark and choose the latest cnvrg Spark image.

- Click Submit.

TIP

Notice that we made sure to choose a compatible compute template and image that support running Spark jobs.

cnvrg will set up 3 discrete experiments and run them all using the hyperparameters as entered. The list of the initializing experiments will load.
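Conceptually, a grid search expands the parameter values into one command per combination. The sketch below illustrates that expansion; it is not cnvrg's implementation, and it assumes each value is passed as a `--partitions <value>` flag, which matches the argparse change made earlier. The function name `expand_grid` is hypothetical.

```python
from itertools import product


def expand_grid(command, params):
    """Return one command line per combination of parameter values.

    params maps each parameter key to a list of discrete values.
    Assumption: values are passed to the script as '--key value' flags.
    """
    keys = list(params)
    commands = []
    for combo in product(*(params[key] for key in keys)):
        flags = " ".join("--%s %s" % (key, value) for key, value in zip(keys, combo))
        commands.append("%s %s" % (command, flags))
    return commands


if __name__ == "__main__":
    for line in expand_grid("spark-submit pi.py", {"partitions": [2, 8, 16]}):
        print(line)
```

With a single key and three values this yields three commands. Adding a second key would multiply the number of experiments by the number of values for that key.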

If you click on one of the experiments, you will be taken to a dashboard for monitoring its progress. You'll notice that the experiment is starting up. cnvrg is doing all of the DevOps work and getting the compute and environment ready. It might take a few minutes, but you can follow the progress live on the experiment page and view the logs as they are written.

Towards the top of the page, you should also notice our two cnvrg tags being tracked. When the experiment is finished, you'll see the calculated value for Pi here, next to PiValue.

# Spark UI

All jobs that run on Spark have a special Spark UI. This dashboard allows you to monitor the health and usage of the master and executor nodes in the Spark cluster.

You can view it from one of the running experiments. It is only live while the experiment and its Spark session are running, so if you have no experiments running, you may need to run another one.

To view the Spark UI:

- Go to a live Spark experiment.

- Click Menu at the top of the page.

- From the menu, click Spark Jobs UI. A new tab will open with the Spark UI.

Have a look around the Spark UI to get yourself acquainted with all the cool information it contains.

# Visualizing and comparing

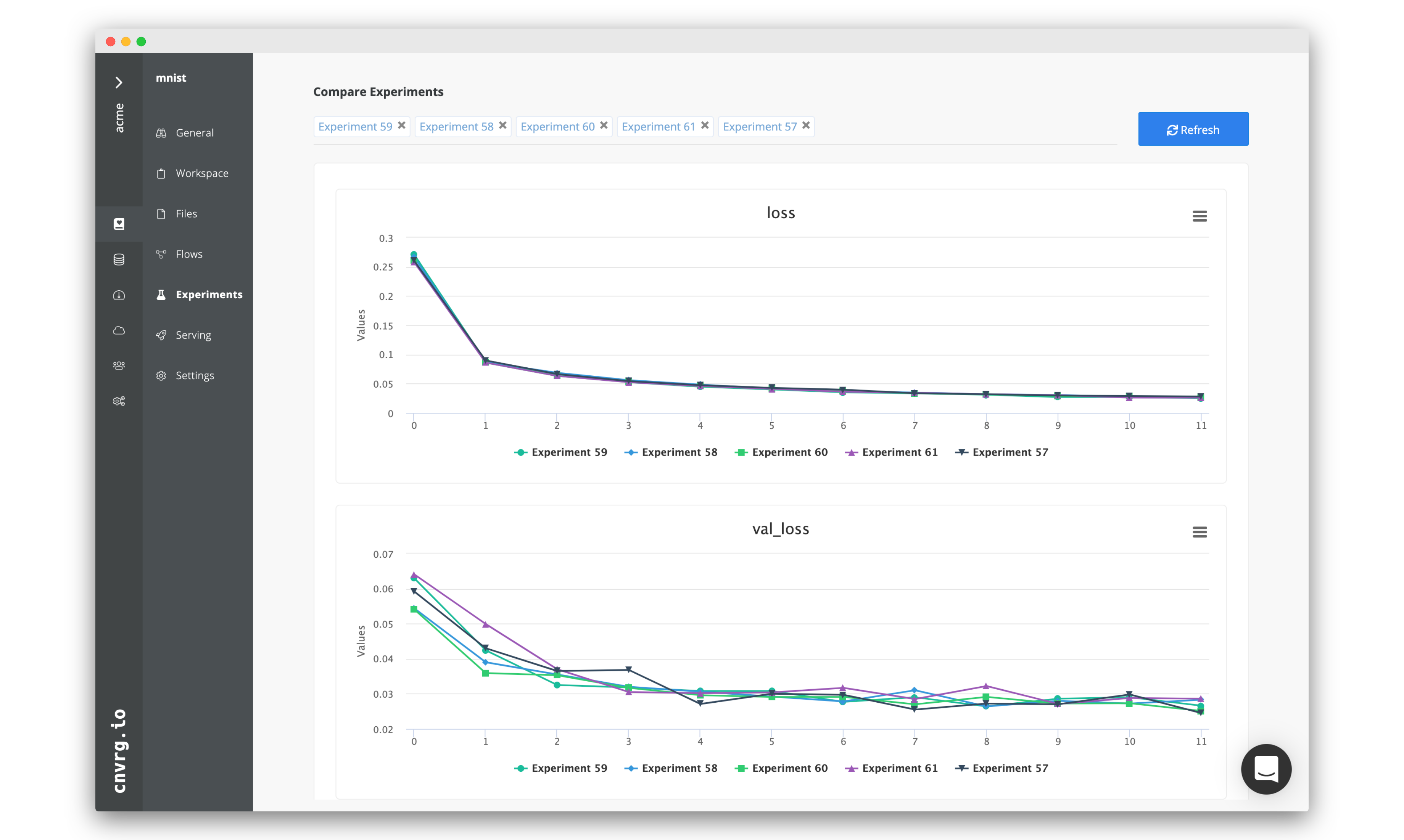

After all of our experiments have run successfully, we can compare them and choose the best-performing model. cnvrg makes this really easy with the built-in visualization and comparison tools.

Navigate back to the Experiments page and select all the experiments we just ran as part of the grid search. At the top, click Actions, then select Compare in the drop-down menu.

Here we can see all the results and metrics of our experiments beautifully displayed together. It makes it easier to analyze the separate experiments. Have a look at the different values for Pi that were approximated by the different experiments. Which one was closest?
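If you prefer to check the answer in code, you can copy the PiValue of each experiment from the comparison page and compute the absolute error against math.pi. The sketch below shows one way to do that. The numbers in the example are placeholders for illustration only, not results from real runs, and `closest_to_pi` is a hypothetical helper name.

```python
import math


def closest_to_pi(estimates):
    """Return the key whose estimate has the smallest absolute error.

    estimates maps the number of partitions to the PiValue that run reported.
    """
    return min(estimates, key=lambda key: abs(estimates[key] - math.pi))


if __name__ == "__main__":
    # Placeholder values only; replace them with the PiValue of your own runs.
    example = {2: 3.10, 8: 3.14, 16: 3.20}
    best = closest_to_pi(example)
    print("Closest run used %d partitions" % best)
```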

If we had built an ML model, this comparison would be really useful for identifying the best experiment and model!

# Conclusion

In this tutorial, we managed to run 3 different experiments approximating the value of Pi using Spark running on Kubernetes! You can see for yourself how easy it was.

Make sure to check out some of our other tutorials to learn more about cnvrg using different use cases.