# Advanced Model Tracking in PyTorch Lightning

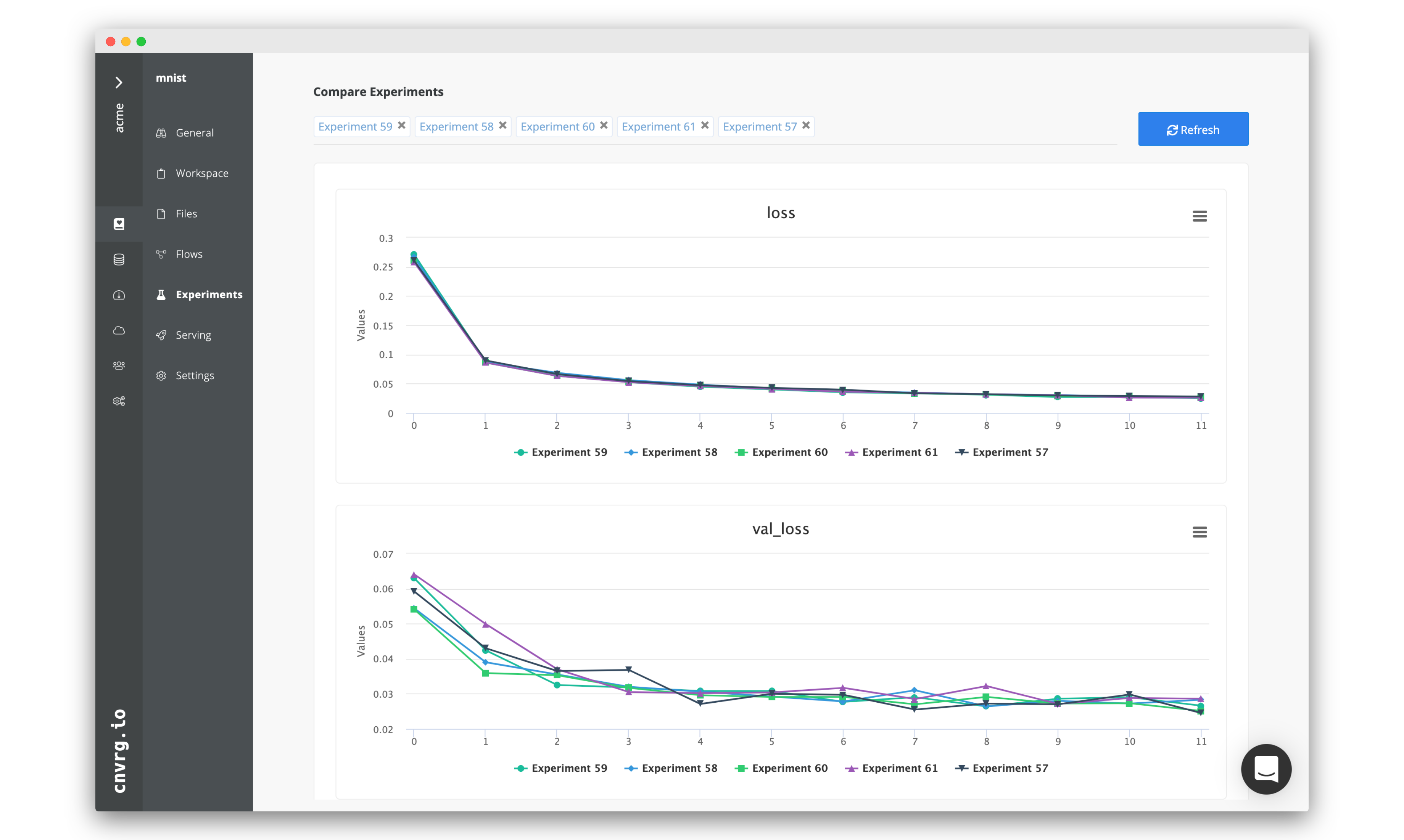

cnvrg.io provides an easy way to track various metrics when training and developing machine learning models.

In this guide, we will create a custom logger for the PyTorch Lightning package that tracks and visualizes training metrics in cnvrg.

# Create and Use a cnvrg Logger

PyTorch Lightning supports custom loggers, which automatically record logs and metrics in your experiments.

# Add the cnvrg logger to your project

Save the following code in your project as `cnvrglogger.py`:

```python
from pytorch_lightning.utilities import rank_zero_only
from pytorch_lightning.loggers import LightningLoggerBase
from pytorch_lightning.loggers.base import rank_zero_experiment
from cnvrg import Experiment


class CNVRGLogger(LightningLoggerBase):
    def __init__(self):
        super().__init__()
        # cnvrg Experiment object that receives the logged metrics
        self._experiment = Experiment()

    @property
    def name(self):
        return 'CNVRGLogger'

    @property
    @rank_zero_experiment
    def experiment(self):
        return self._experiment

    @property
    def version(self):
        # Return the experiment version, int or str.
        return '0.1'

    @rank_zero_only
    def log_hyperparams(self, params):
        # Hyperparameters are not sent to cnvrg in this example
        pass

    @rank_zero_only
    def log_metrics(self, metrics, step):
        # Forward each metric Lightning hands to the logger to the cnvrg experiment
        e = self._experiment
        for m in metrics:
            e.log_metric(m, metrics[m], step)

    @rank_zero_only
    def save(self):
        # Optional. Any code necessary to save logger data goes here.
        # If you implement this, remember to call `super().save()`
        # at the start of the method (important for aggregation of metrics).
        super().save()

    @rank_zero_only
    def finalize(self, status):
        # Optional. Any code that needs to be run after training
        # finishes goes here.
        pass
```
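Because `CNVRGLogger` creates a cnvrg `Experiment` as soon as it is constructed, its forwarding logic is awkward to check offline. The sketch below isolates that logic in plain Python. `RecordingExperiment` and `forward_metrics` are hypothetical names, not part of cnvrg or Lightning: the stand-in simply records the `(name, value, step)` arguments that `log_metrics` would pass to `log_metric`.

```python
from typing import Any, Dict, List, Tuple


class RecordingExperiment:
    """Hypothetical stand-in for cnvrg's Experiment: it only records calls."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, Any, int]] = []

    def log_metric(self, key: str, value: Any, step: int) -> None:
        # Store the same positional arguments CNVRGLogger passes to log_metric
        self.calls.append((key, value, step))


def forward_metrics(experiment, metrics: Dict[str, float], step: int) -> None:
    # Same loop as CNVRGLogger.log_metrics: one log_metric call per metric name
    for name, value in metrics.items():
        experiment.log_metric(name, value, step)


if __name__ == "__main__":
    exp = RecordingExperiment()
    forward_metrics(exp, {"loss": 0.42, "acc": 0.9}, step=10)
    print(exp.calls)  # [('loss', 0.42, 10), ('acc', 0.9, 10)]
```

Every entry in the metrics dictionary becomes a separate `log_metric` call sharing the same step, so each metric appears as its own series in the experiment.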

In your training code, import the cnvrg logger and pass it to the PyTorch Lightning `Trainer`:

```python
import pytorch_lightning as pl
from cnvrglogger import CNVRGLogger

cnvrg_logger = CNVRGLogger()

# Pass the logger to the Trainer
trainer = pl.Trainer(gpus=1, max_epochs=2, progress_bar_refresh_rate=20, logger=[cnvrg_logger])
```
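The `logger` argument takes a list, so the cnvrg logger can run alongside other loggers, and each logger in the list receives the same metrics for the same step. The following plain-Python sketch models that fan-out; `InMemoryLogger` and `log_to_all` are hypothetical names used for illustration, not Lightning classes or functions.

```python
from typing import Dict, List, Protocol


class MetricsLogger(Protocol):
    def log_metrics(self, metrics: Dict[str, float], step: int) -> None: ...


class InMemoryLogger:
    """Hypothetical logger that keeps every (step, metrics) pair it receives."""

    def __init__(self) -> None:
        self.history: List[tuple] = []

    def log_metrics(self, metrics: Dict[str, float], step: int) -> None:
        self.history.append((step, dict(metrics)))


def log_to_all(loggers: List[MetricsLogger], metrics: Dict[str, float], step: int) -> None:
    # Every logger in the list receives the same metrics for the same step
    for logger in loggers:
        logger.log_metrics(metrics, step)


if __name__ == "__main__":
    cnvrg_like, local = InMemoryLogger(), InMemoryLogger()
    log_to_all([cnvrg_like, local], {"loss": 0.3}, step=50)
    print(cnvrg_like.history == local.history)  # True
```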

In your training step, call `self.log` to record a metric; the logger then sends it to your cnvrg experiment automatically:

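```python
import pytorch_lightning as pl
import torch.nn.functional as F


class LitModel(pl.LightningModule):  # your LightningModule (name is illustrative)
    # ... layers, forward() and configure_optimizers() go here ...

    def training_step(self, batch, batch_nb):
        x, y = batch
        loss = F.cross_entropy(self(x), y)
        # Logged values are passed on to every logger attached to the Trainer
        self.log('loss', loss)
        return loss
```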
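Values passed to `self.log` inside `training_step` are not necessarily sent to the logger on every call: by default, Lightning hands step-level metrics to its loggers every `log_every_n_steps` steps (a `Trainer` argument, 50 by default). The sketch below is a simplified model of that behavior, assuming a single `loss` metric; `simulate_logging` is a hypothetical helper, not Lightning's implementation, and the real step bookkeeping differs in detail.

```python
from typing import Dict, List, Tuple


def simulate_logging(
    losses: List[float], log_every_n_steps: int = 50
) -> List[Tuple[int, Dict[str, float]]]:
    """Simplified model (not Lightning's code) of when logged values reach a logger.

    Each entry in `losses` plays the role of one `self.log('loss', loss)` call in
    training_step. Only the latest value is kept, and it is handed to the logger
    every `log_every_n_steps` steps as (step, metrics).
    """
    delivered: List[Tuple[int, Dict[str, float]]] = []
    latest: Dict[str, float] = {}
    for step, loss in enumerate(losses):
        latest["loss"] = loss  # stands in for self.log('loss', loss)
        if (step + 1) % log_every_n_steps == 0:
            # What a logger's log_metrics(metrics, step) would receive
            delivered.append((step, dict(latest)))
    return delivered
```

For example, `simulate_logging([1.0, 0.8, 0.6, 0.5], log_every_n_steps=2)` returns `[(1, {'loss': 0.8}), (3, {'loss': 0.5})]`, so the cnvrg experiment would show two points rather than four. Lowering `log_every_n_steps` gives finer-grained curves at the cost of more logging calls.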