# How to use Spark on Kubernetes with cnvrg

# About this guide

This guide introduces the cnvrg platform and shows how to launch a Spark session on a Kubernetes cluster from a Jupyter workspace.

# Create a new project and launch a workspace

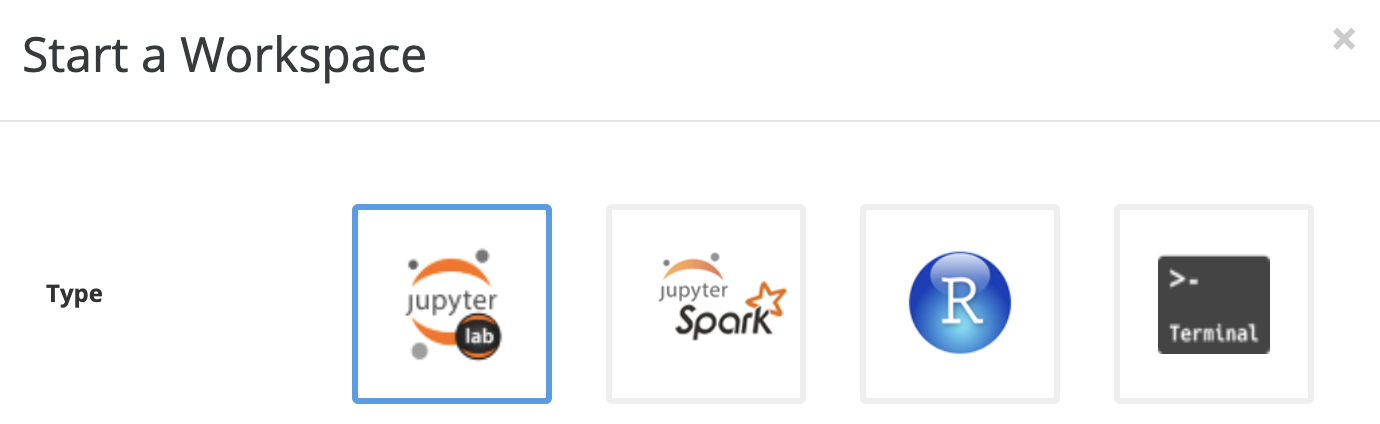

Go to your new project and click Launch a Workspace. A window opens where you can select the workspace settings, such as Compute, Environment, and Dataset. Make sure to select a Spark environment and a suitable compute. Click Start to launch the workspace.

TIP

You can do the same for Experiments and Flows in cnvrg, which also let you schedule runs.

# Test Spark is available

Now that a Jupyter session is running with Spark preconfigured, run the following code sample to test it. The sample estimates pi with a Monte Carlo simulation whose samples are distributed across the cluster:

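```python
import random

import pyspark

# Reuse the preconfigured Spark context if one exists, otherwise create one.
sc = pyspark.SparkContext.getOrCreate()

num_samples = 1000


def inside(_):
    # Sample a random point in the unit square and check whether it
    # falls inside the quarter circle of radius 1.
    x, y = random.random(), random.random()
    return x * x + y * y < 1


# Distribute the samples across the cluster and count the hits.
count = sc.parallelize(range(num_samples)).filter(inside).count()

# The fraction of hits approximates pi / 4.
pi = 4 * count / num_samples
print(pi)

sc.stop()
```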
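
To see what the Spark job computes, it can help to run the same estimate without Spark. The following is a minimal sketch in plain Python, not part of cnvrg or Spark. The function name `estimate_pi` and its `seed` parameter are assumptions introduced here for illustration. A point drawn uniformly from the unit square lands inside the quarter circle of radius 1 with probability pi / 4, so four times the fraction of hits approximates pi.

```python
import random


def estimate_pi(num_samples, seed=None):
    """Estimate pi by sampling points uniformly in the unit square.

    `seed` is a hypothetical convenience parameter that makes the
    result reproducible; the Spark sample above does not seed its draws.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        # Same test as inside() in the Spark sample.
        if x * x + y * y < 1:
            hits += 1
    return 4 * hits / num_samples


print(estimate_pi(1000, seed=42))
```

With only 1000 samples, the estimate is typically accurate to about one decimal place, so a result near 3.1 from the Spark sample suggests the job ran correctly. Increasing `num_samples` narrows the error in both versions.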