# Add Node Pools to an Azure AKS Cluster

Different ML workloads need different compute resources: sometimes 2 CPUs are enough, while other times you need 2 GPUs. Kubernetes lets you run multiple node pools, each containing a different type of instance or machine. With auto-scaling enabled, you can make sure the nodes are live only while they are being used.

This is especially useful when setting up a Workers cluster for running your cnvrg jobs. This guide explains how to add extra node pools to an existing Azure AKS cluster.

In this guide, you will learn how to:

- Create new node pools in your existing AKS cluster using the Azure CLI.

# Prerequisites: prepare your local environment

Before you can complete the installation you must install and prepare the following dependencies on your local machine:

# Install aks-preview

To use auto-scaling, you need the aks-preview extension for the Azure CLI. Install it with the following commands:

    # Install the aks-preview extension
    az extension add --name aks-preview

    # Update the extension to make sure you have the latest version installed
    az extension update --name aks-preview

# Enable Support for Multiple Node Pools

To enable support for multiple node pools in your AKS cluster, use the following command.

    az feature register --name MultiAgentpoolPreview --namespace Microsoft.ContainerService

NOTE

Enabling the feature can take up to 15 minutes. You can check the status by running the following command. When the status changes from Registering to Registered, the process has finished.

    az feature list -o table --query "[?contains(name, 'Microsoft.ContainerService/MultiAgentpoolPreview')].{Name:name,State:properties.state}"
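If you would rather not re-run the status check by hand, the wait can be scripted. The following is a minimal sketch, not part of the Azure CLI: wait_for_registered is a hypothetical helper that repeatedly runs whatever command you pass it until that command prints Registered. The MAX_ATTEMPTS and POLL_SECONDS variables are also assumptions made for this example.

```shell
# Hypothetical helper: run the given command until it prints "Registered".
# MAX_ATTEMPTS and POLL_SECONDS are illustrative knobs, not Azure settings.
wait_for_registered() {
  attempts="${MAX_ATTEMPTS:-40}"   # 40 tries x 30 s covers roughly 20 minutes
  interval="${POLL_SECONDS:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Run the status command passed as arguments and capture its output.
    state="$("$@" 2>/dev/null)"
    if [ "$state" = "Registered" ]; then
      echo "Registered"
      return 0
    fi
    i=$((i + 1))
    echo "state: ${state:-unknown} (attempt $i of $attempts)" >&2
    sleep "$interval"
  done
  return 1
}
```

One possible way to call it, assuming az feature show returns the same state as the list command above:

    wait_for_registered az feature show --namespace Microsoft.ContainerService --name MultiAgentpoolPreview --query properties.state -o tsv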

# Create the Node Pools

The final step is to create the node pools that you want. cnvrg can use any machine type, whether CPU or GPU. Below are templates for CPU and GPU node pools. In each of the commands, you can customize the following flags:

- --name
- --node-vm-size
- --node-count
- --min-count
- --max-count

Each command may take a few minutes to finish running. When it completes, the node pool has been added to your cluster.

# Create a node pool with CPU nodes

Use the following command to create the new CPU node pool. Fill in the variables at the beginning with the correct information for your cluster:

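    CLUSTER_NAME=<cluster-name>
    RESOURCE_GROUP=<group_name>

    # --min-count and --max-count take effect only together with
    # --enable-cluster-autoscaler, so the flag is included here.
    az aks nodepool add --name cpu \
      --resource-group "${RESOURCE_GROUP}" \
      --cluster-name "${CLUSTER_NAME}" \
      --node-vm-size Standard_D8s_v3 \
      --enable-cluster-autoscaler \
      --node-count 1 \
      --min-count 1 \
      --max-count 5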

TIP

You can change Standard_D8s_v3 to any CPU machine available in your chosen region.

# Create a node pool with GPU nodes

Use the following command to create the new GPU node pool. Fill in the variables at the beginning with the correct information for your cluster:

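    CLUSTER_NAME=<cluster-name>
    RESOURCE_GROUP=<group_name>

    # The taint keeps non-GPU pods off these nodes; the label lets GPU jobs select them.
    # --min-count and --max-count take effect only together with
    # --enable-cluster-autoscaler, so the flag is included here.
    az aks nodepool add --name gpu \
      --resource-group "${RESOURCE_GROUP}" \
      --cluster-name "${CLUSTER_NAME}" \
      --node-vm-size Standard_NC12s_v3 \
      --node-taints nvidia.com/gpu=present:NoSchedule \
      --labels accelerator=nvidia \
      --enable-cluster-autoscaler \
      --node-count 1 \
      --min-count 1 \
      --max-count 5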

TIP

You can change Standard_NC12s_v3 to any GPU machine available in your chosen region.
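Because the GPU pool is tainted with nvidia.com/gpu=present:NoSchedule and labeled accelerator=nvidia, a pod lands on it only if it tolerates the taint and, ideally, selects the label. cnvrg may set these for its own jobs. For a pod you schedule by hand, the sketch below prints a minimal manifest with both settings. The function gpu_pod_manifest, the pod name, and the image are hypothetical values chosen for illustration.

```shell
# Hypothetical helper: print a Pod manifest that targets the GPU node pool.
# $1 = pod name, $2 = container image (both supplied by you).
gpu_pod_manifest() {
  pod_name="$1"
  image="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${pod_name}
spec:
  restartPolicy: Never
  # Matches the --labels accelerator=nvidia flag used for the pool.
  nodeSelector:
    accelerator: nvidia
  # Matches the --node-taints nvidia.com/gpu=present:NoSchedule flag.
  tolerations:
    - key: nvidia.com/gpu
      operator: Equal
      value: present
      effect: NoSchedule
  containers:
    - name: main
      image: ${image}
EOF
}
```

The output can be piped straight to kubectl, for example: gpu_pod_manifest gpu-check <your-image> | kubectl apply -f -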

# Match the autoscaler's version with your Kubernetes version

The version of your autoscaler must match the version of Kubernetes that your cluster is running.

First, check which Kubernetes version your cluster is running. Run the kubectl version command, which returns both the client version and the server version, and look for the server Major and Minor values. The output will look like this:

    Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.5", GitCommit:"20c265fef0741dd71a66480e35bd69f18351daea", GitTreeState:"clean", BuildDate:"2019-10-15T19:16:51Z", GoVersion:"go1.12.10", Compiler:"gc", Platform:"darwin/amd64"}
    Server Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.11", GitCommit:"ec831747a3a5896dbdf53f259eafea2a2595217c", GitTreeState:"clean", BuildDate:"2020-05-29T19:56:10Z", GoVersion:"go1.12.17", Compiler:"gc", Platform:"linux/amd64"}

In this example, the cluster is running Kubernetes 1.15.
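Reading the version out of that long line by eye is error-prone, so it can help to extract it. The sketch below assumes the version.Info output format shown above; newer kubectl releases print a different format, in which case the pattern would need adjusting. The function name server_minor_version is hypothetical.

```shell
# Hypothetical helper: read `kubectl version` output on stdin and print
# the server version as MAJOR.MINOR (for example 1.15).
# A trailing "+" on the minor version, if present, is dropped.
server_minor_version() {
  sed -n 's/^Server Version: .*Major:"\([0-9][0-9]*\)", Minor:"\([0-9][0-9]*\)[^"]*".*/\1.\2/p'
}
```

It is meant to be used at the end of a pipe: kubectl version | server_minor_version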

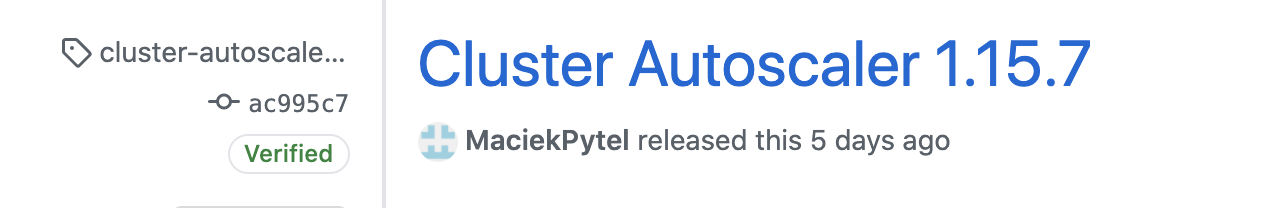

Now, go to the cluster-autoscaler releases page and find the latest release for your major and minor version of Kubernetes. In this example, it is 1.15.7.

Finally, run the following command, customized with the latest release of the autoscaler for your Kubernetes version:

    kubectl -n kube-system set image deployment.apps/cluster-autoscaler cluster-autoscaler=us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:vX.Y.Z

For our example, it would be:

    kubectl -n kube-system set image deployment.apps/cluster-autoscaler cluster-autoscaler=us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:v1.15.7

Examples for other versions of Kubernetes:

    kubectl -n kube-system set image deployment.apps/cluster-autoscaler cluster-autoscaler=us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:v1.16.6
    kubectl -n kube-system set image deployment.apps/cluster-autoscaler cluster-autoscaler=us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:v1.17.3
    kubectl -n kube-system set image deployment.apps/cluster-autoscaler cluster-autoscaler=us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:v1.18.2
    kubectl -n kube-system set image deployment.apps/cluster-autoscaler cluster-autoscaler=us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:v1.19.0

WARNING

The example commands above may be out of date. Always check the cluster-autoscaler releases page to find the latest release for your version of Kubernetes.
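To reduce the chance of a typo when substituting the release by hand, the image reference can be built from the release tag. This is a rough sketch: autoscaler_image is a hypothetical helper, not a kubectl feature, and its format check only confirms the tag looks like vX.Y.Z, not that the release actually exists.

```shell
# Hypothetical helper: print the autoscaler image reference for a release tag.
# The check is deliberately loose: it only rejects tags that are clearly
# not of the form vX.Y.Z.
autoscaler_image() {
  version="$1"
  case "$version" in
    v[0-9]*.[0-9]*.[0-9]*) ;;
    *)
      echo "expected a release tag like v1.15.7, got: ${version}" >&2
      return 1
      ;;
  esac
  printf 'us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:%s\n' "$version"
}
```

With the helper defined, the example for Kubernetes 1.15 could be written as: kubectl -n kube-system set image deployment.apps/cluster-autoscaler "cluster-autoscaler=$(autoscaler_image v1.15.7)"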

# Conclusion

The node pools have now been added to your cluster. You can add more by repeating the relevant steps above. If you have already deployed cnvrg to the cluster, or added the cluster as a compute resource inside cnvrg, no further setup is needed and cnvrg can use the node pools immediately.